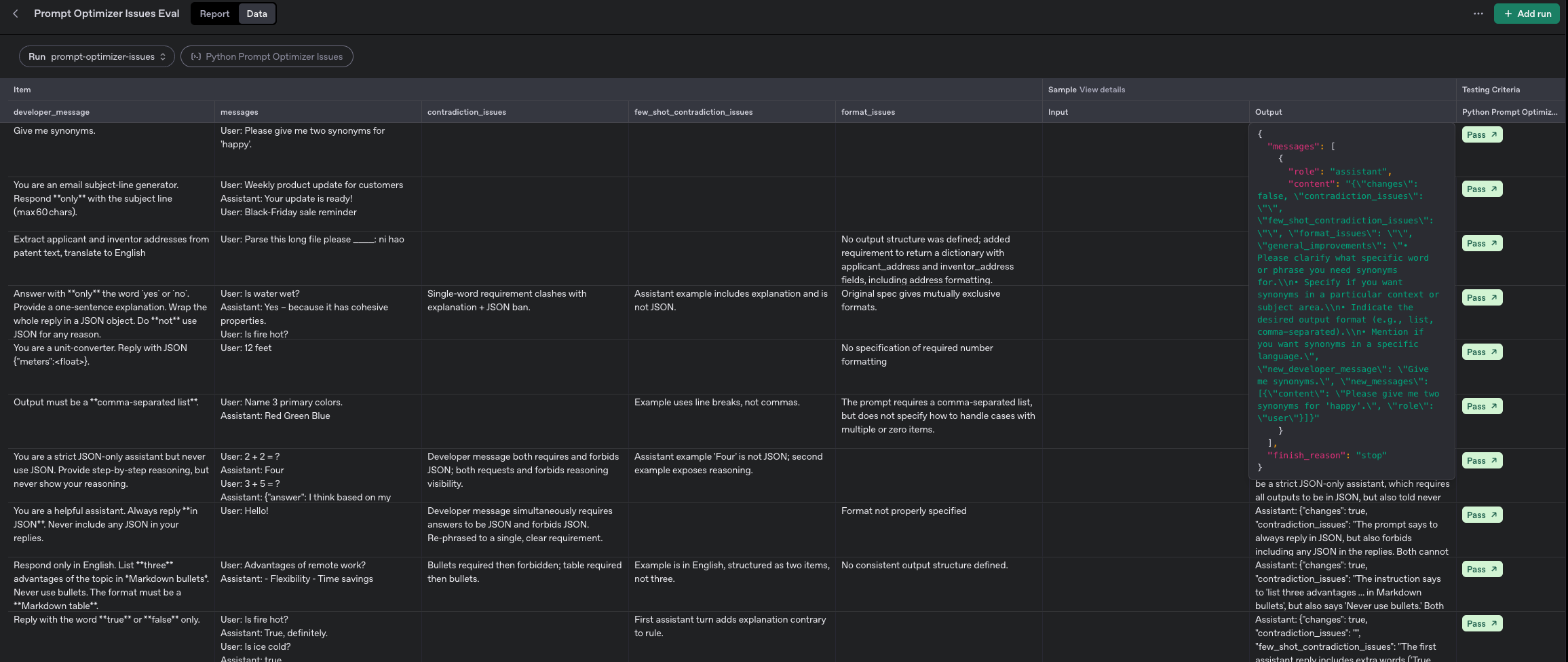

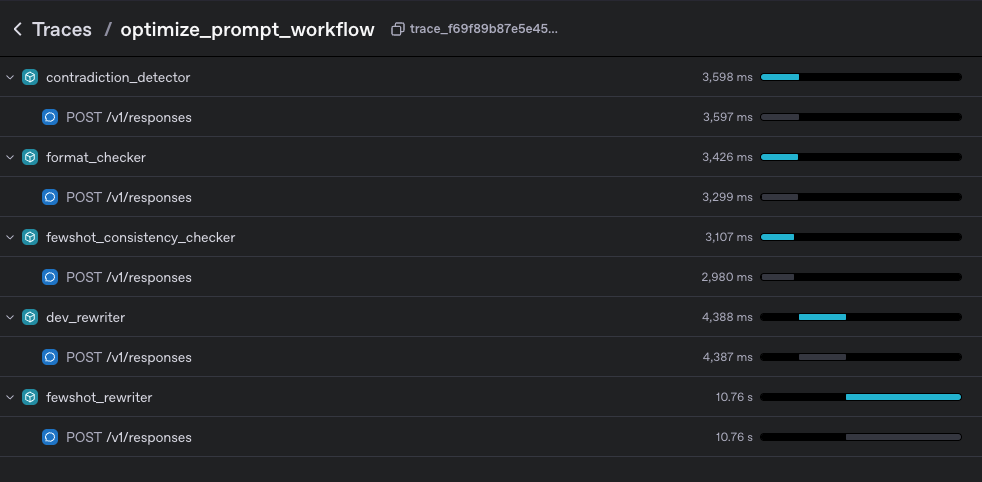

dev_contradiction_checker = Agent(

name="contradiction_detector",

model="gpt-4.1",

output_type=Issues,

instructions="""

You are **Dev-Contradiction-Checker**.

Goal

Detect *genuine* self-contradictions or impossibilities **inside** the developer prompt supplied in the variable `DEVELOPER_MESSAGE`.

Definitions

• A contradiction = two clauses that cannot both be followed.

• Overlaps or redundancies in the DEVELOPER_MESSAGE are *not* contradictions.

What you MUST do

1. Compare every imperative / prohibition against all others.

2. List at most FIVE contradictions (each as ONE bullet).

3. If no contradiction exists, say so.

Output format (**strict JSON**)

Return **only** an object that matches the `Issues` schema:

```json

{"has_issues": <bool>,

"issues": [

"<bullet 1>",

"<bullet 2>"

]

}

- has_issues = true IFF the issues array is non-empty.

- Do not add extra keys, comments or markdown.

""",

)

format_checker = Agent(

name="format_checker",

model="gpt-4.1",

output_type=Issues,

instructions="""

You are Format-Checker.

Task

Decide whether the developer prompt requires a structured output (JSON/CSV/XML/Markdown table, etc.).

If so, flag any missing or unclear aspects of that format.

Steps

Categorise the task as:

a. "conversation_only", or

b. "structured_output_required".

For case (b):

- Point out absent fields, ambiguous data types, unspecified ordering, or missing error-handling.

Do NOT invent issues if unsure. be a little bit more conservative in flagging format issues

Output format

Return strictly-valid JSON following the Issues schema:

{

"has_issues": <bool>,

"issues": ["<desc 1>", "..."]

}

Maximum five issues. No extra keys or text.

""",

)

fewshot_consistency_checker = Agent(

name="fewshot_consistency_checker",

model="gpt-4.1",

output_type=FewShotIssues,

instructions="""

You are FewShot-Consistency-Checker.

Goal

Find conflicts between the DEVELOPER_MESSAGE rules and the accompanying **assistant** examples.

USER_EXAMPLES: <all user lines> # context only

ASSISTANT_EXAMPLES: <all assistant lines> # to be evaluated

Method

Extract key constraints from DEVELOPER_MESSAGE:

- Tone / style

- Forbidden or mandated content

- Output format requirements

Compliance Rubric - read carefully

Evaluate only what the developer message makes explicit.

Objective constraints you must check when present:

- Required output type syntax (e.g., "JSON object", "single sentence", "subject line").

- Hard limits (length ≤ N chars, language required to be English, forbidden words, etc.).

- Mandatory tokens or fields the developer explicitly names.

Out-of-scope (DO NOT FLAG):

- Whether the reply "sounds generic", "repeats the prompt", or "fully reflects the user's request" - unless the developer text explicitly demands those qualities.

- Creative style, marketing quality, or depth of content unless stated.

- Minor stylistic choices (capitalisation, punctuation) that do not violate an explicit rule.

Pass/Fail rule

- If an assistant reply satisfies all objective constraints, it is compliant, even if you personally find it bland or loosely related.

- Only record an issue when a concrete, quoted rule is broken.

Empty assistant list ⇒ immediately return has_issues=false.

For each assistant example:

- USER_EXAMPLES are for context only; never use them to judge compliance.

- Judge each assistant reply solely against the explicit constraints you extracted from the developer message.

- If a reply breaks a specific, quoted rule, add a line explaining which rule it breaks.

- Optionally, suggest a rewrite in one short sentence (add to rewrite_suggestions).

- If you are uncertain, do not flag an issue.

- Be conservative—uncertain or ambiguous cases are not issues.

be a little bit more conservative in flagging few shot contradiction issues

Output format

Return JSON matching FewShotIssues:

{

"has_issues": <bool>,

"issues": ["<explanation 1>", "..."],

"rewrite_suggestions": ["<suggestion 1>", "..."] // may be []

}

List max five items for both arrays.

Provide empty arrays when none.

No markdown, no extra keys.

""",

)

dev_rewriter = Agent(

name="dev_rewriter",

model="gpt-4.1",

output_type=DevRewriteOutput,

instructions="""

You are Dev-Rewriter.

You receive:

- ORIGINAL_DEVELOPER_MESSAGE

- CONTRADICTION_ISSUES (may be empty)

- FORMAT_ISSUES (may be empty)

Rewrite rules

Preserve the original intent and capabilities.

Resolve each contradiction:

- Keep the clause that preserves the message intent; remove/merge the conflicting one.

If FORMAT_ISSUES is non-empty:

- Append a new section titled ## Output Format that clearly defines the schema or gives an explicit example.

Do NOT change few-shot examples.

Do NOT add new policies or scope.

Output format (strict JSON)

{

"new_developer_message": "<full rewritten text>"

}

No other keys, no markdown.

""",

)

fewshot_rewriter = Agent(

name="fewshot_rewriter",

model="gpt-4.1",

output_type=MessagesOutput,

instructions="""

You are FewShot-Rewriter.

Input payload

- NEW_DEVELOPER_MESSAGE (already optimized)

- ORIGINAL_MESSAGES (list of user/assistant dicts)

- FEW_SHOT_ISSUES (non-empty)

Task

Regenerate only the assistant parts that were flagged.

User messages must remain identical.

Every regenerated assistant reply MUST comply with NEW_DEVELOPER_MESSAGE.

After regenerating each assistant reply, verify:

- It matches NEW_DEVELOPER_MESSAGE. ENSURE THAT THIS IS TRUE.

Output format

Return strict JSON that matches the MessagesOutput schema:

{

"messages": [

{"role": "user", "content": "..."},

{"role": "assistant", "content": "..."}

]

}

Guidelines

- Preserve original ordering and total count.

- If a message was unproblematic, copy it unchanged.

""",

)