1. Executive Summary

1.1. Purpose and Audience

This notebook provides a hands-on guide for building temporally-aware knowledge graphs and performing multi-hop retrieval directly over those graphs.

It's designed for engineers, architects, and analysts working on temporally-aware knowledge graphs. Whether you’re prototyping, deploying at scale, or exploring new ways to use structured data, you’ll find practical workflows, best practices, and decision frameworks to accelerate your work.

This cookbook presents two hands-on workflows you can use, extend, and deploy right away:

-

Temporally-aware knowledge graph (KG) construction

A key challenge in developing knowledge-driven AI systems is maintaining a database that stays current and relevant. While much attention is given to boosting retrieval accuracy with techniques like semantic similarity and re-ranking, this guide focuses on a fundamental—yet frequently overlooked—aspect: systematically updating and validating your knowledge base as new data arrives.

No matter how advanced your retrieval algorithms are, their effectiveness is limited by the quality and freshness of your database. This cookbook demonstrates how to routinely validate and update knowledge graph entries as new data arrives, helping ensure that your knowledge base remains accurate and up to date.

-

Multi-hop retrieval using knowledge graphs

Learn how to combine OpenAI models (such as o3, o4-mini, GPT-4.1, and GPT-4.1-mini) with structured graph queries via tool calls, enabling the model to traverse your graph in multiple steps across entities and relationships.

This method lets your system answer complex, multi-faceted questions that require reasoning over several linked facts, going well beyond what single-hop retrieval can accomplish.

Inside, you'll discover:

- Practical decision frameworks for choosing models and prompting techniques at each stage

- Plug-and-play code examples for easy integration into your ML and data pipelines

- Links to in-depth resources on OpenAI tool use, fine-tuning, graph backend selection, and more

- A clear path from prototype to production, with actionable best practices for scaling and reliability

Note: All benchmarks and recommendations are based on the best available models and practices as of June 2025. As the ecosystem evolves, periodically revisit your approach to stay current with new capabilities and improvements.

1.2. Key takeaways

Creating a Temporally-Aware Knowledge Graph with a Temporal Agent

-

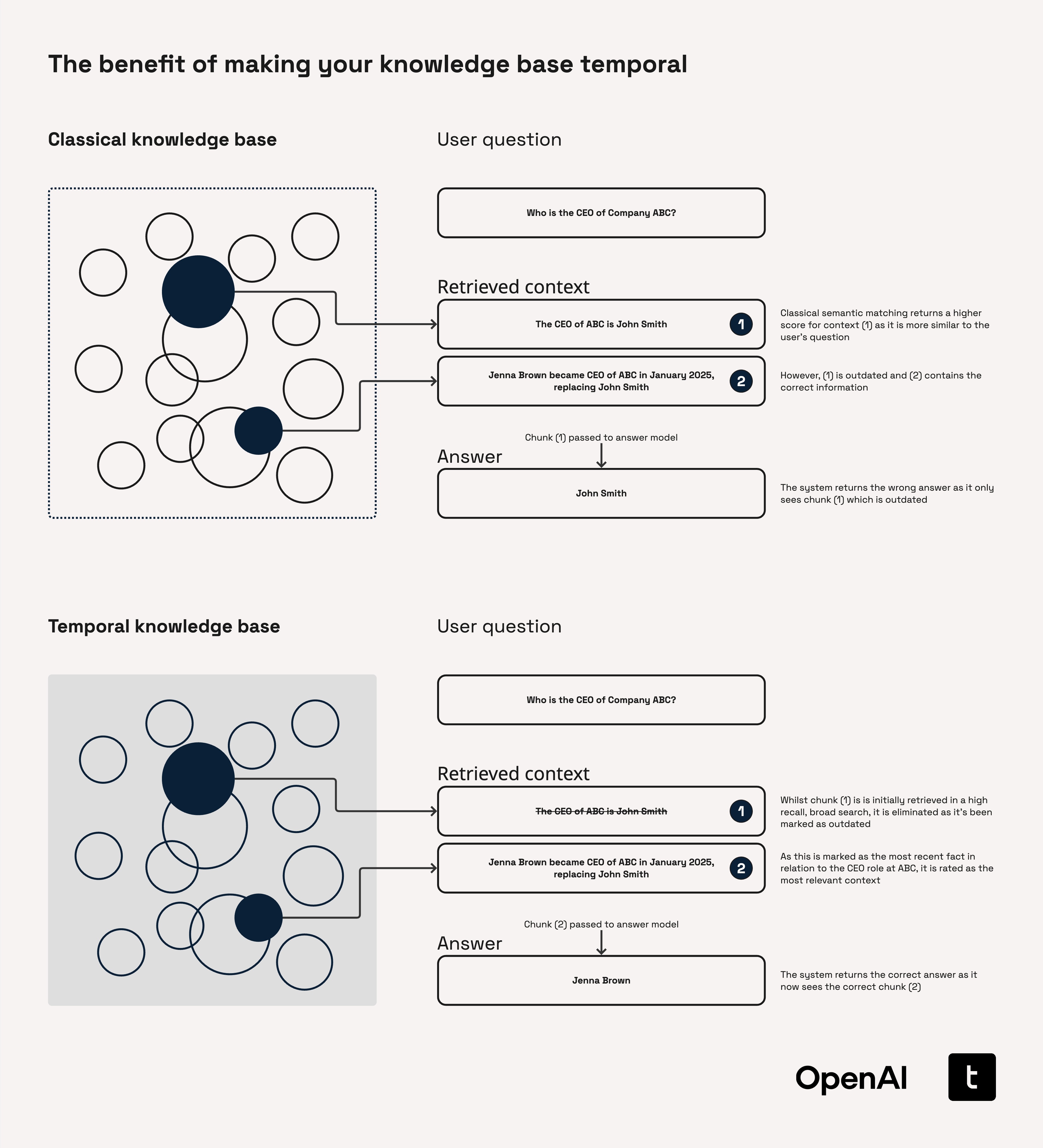

Why make your knowledge graph temporal?

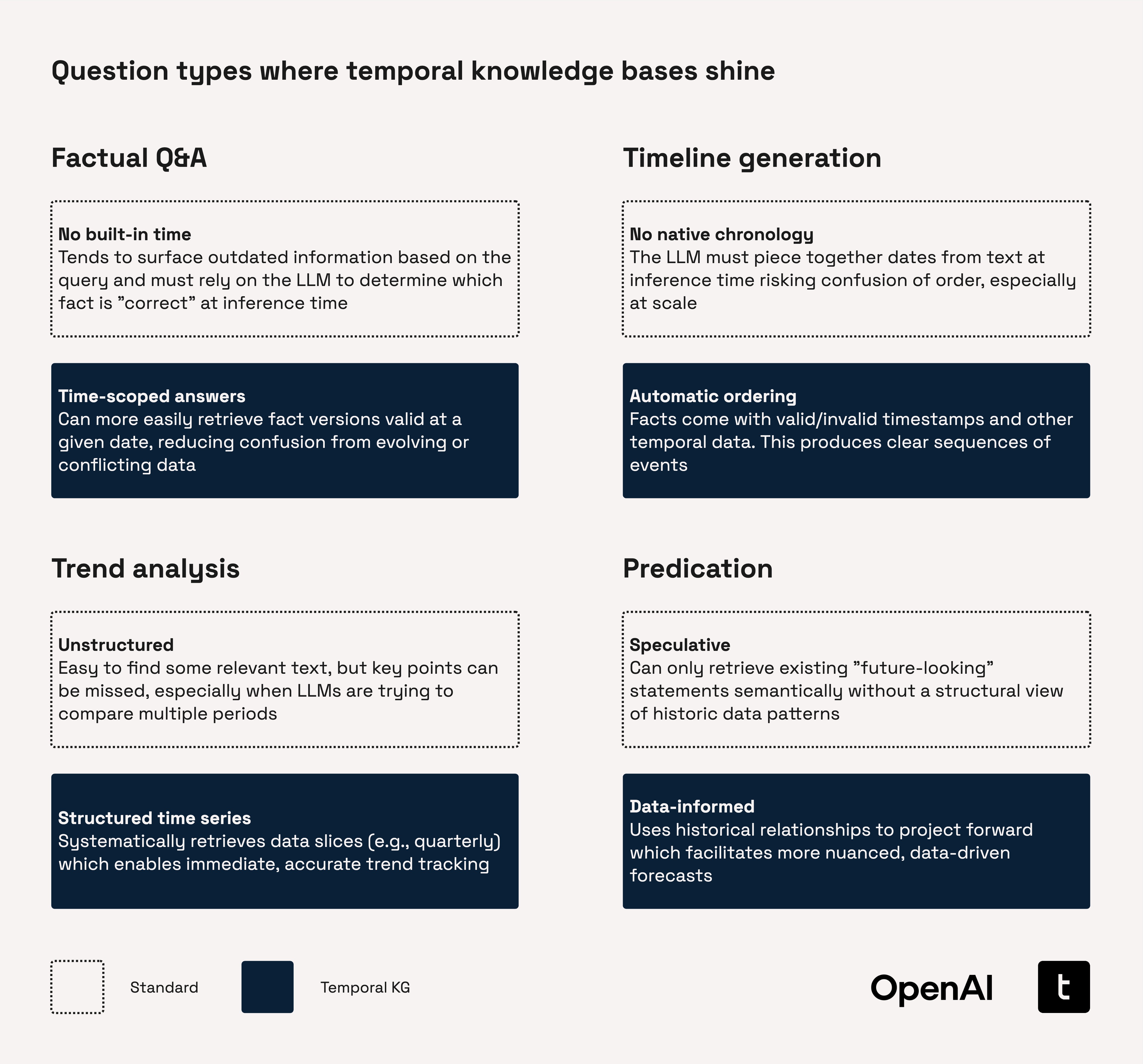

Traditional knowledge graphs treat facts as static, but real-world information evolves constantly. What was true last quarter may be outdated today, risking errors or misinformed decisions if the graph does not capture change over time. Temporal knowledge graphs allow you to precisely answer questions like “What was true on a given date?” or analyse how facts and relationships have shifted, ensuring decisions are always based on the most relevant context.

-

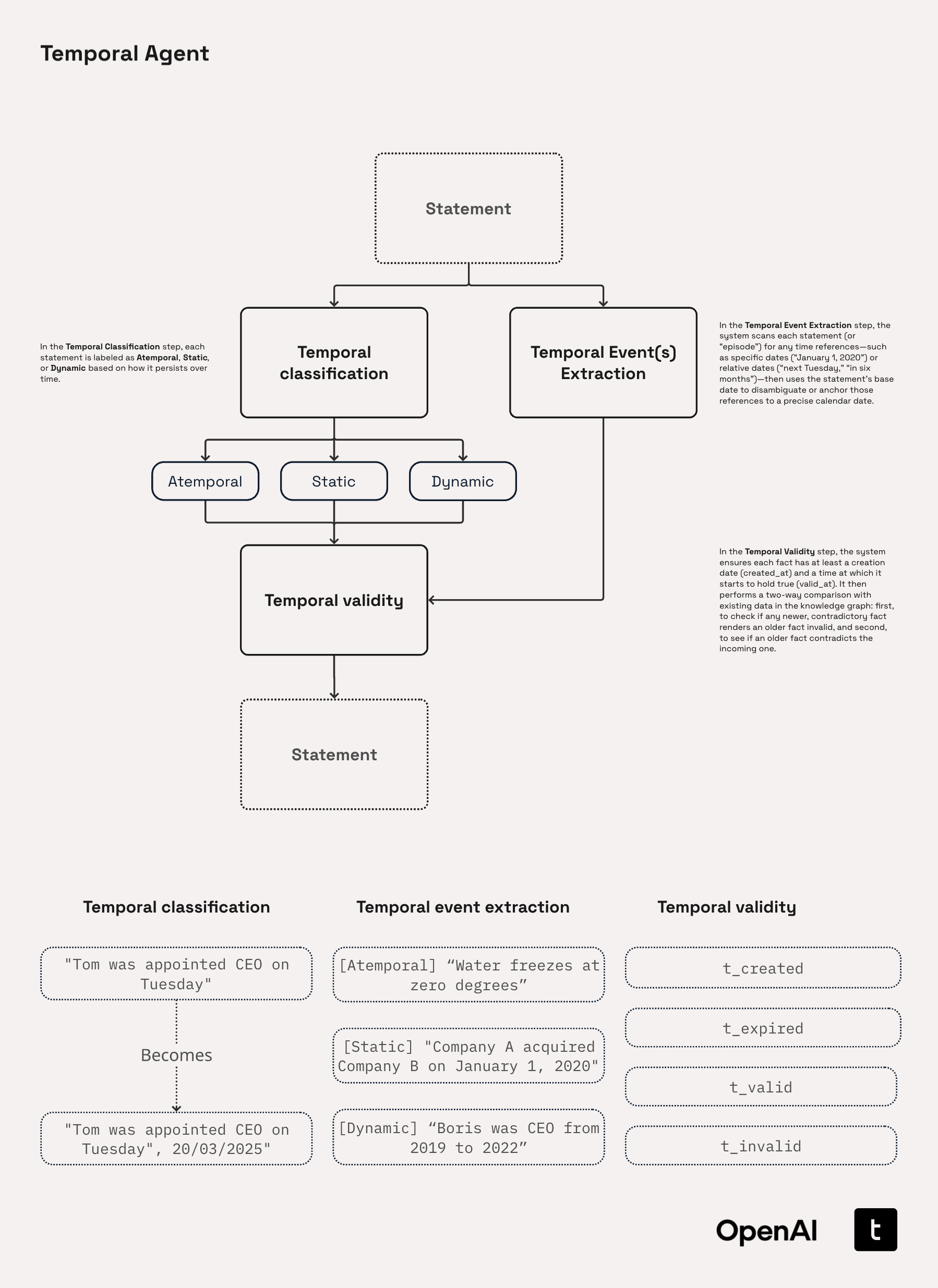

What is a Temporal Agent?

A Temporal Agent is a pipeline component that ingests raw data and produces time-stamped triplets for your knowledge graph. This enables precise time-based querying, timeline construction, trend analysis, and more.

-

How does the pipeline work?

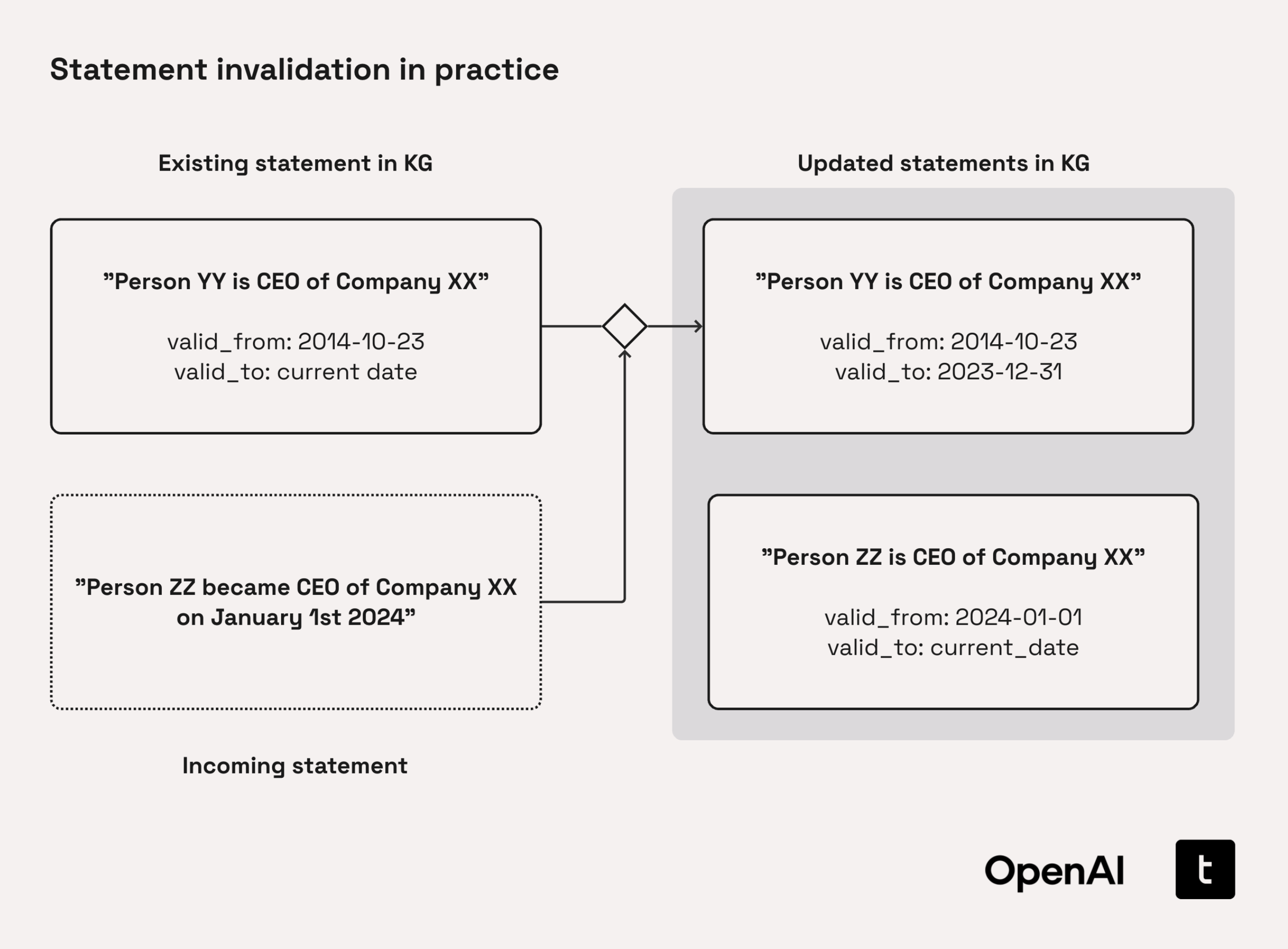

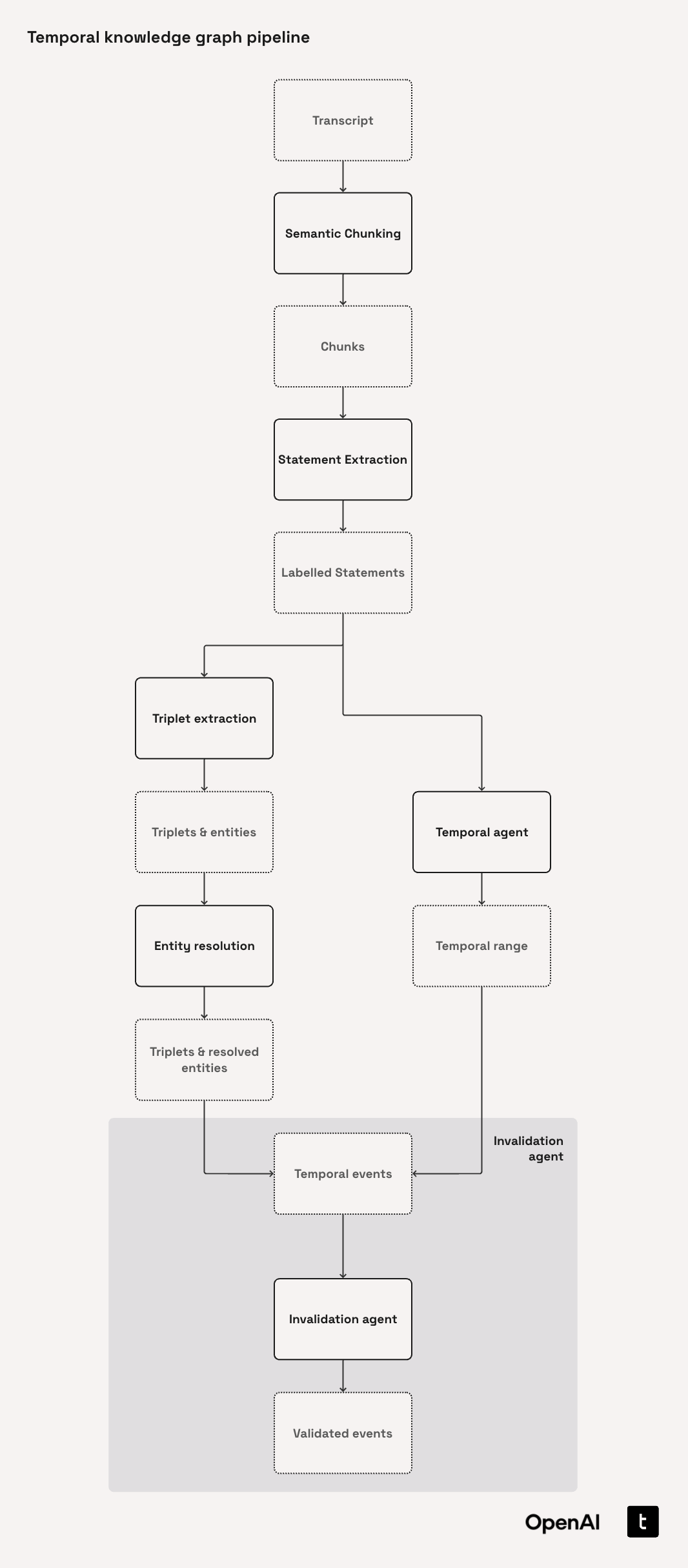

The pipeline starts by semantically chunking your raw documents. These chunks are decomposed into statements ready for our Temporal Agent, which then creates time-aware triplets. An Invalidation Agent can then perform temporal validity checks, spotting and handling any statements that are invalidated by new statements that are incident on the graph.

Multi-Step Retrieval Over a Knowledge Graph

-

Why use multi-step retrieval?

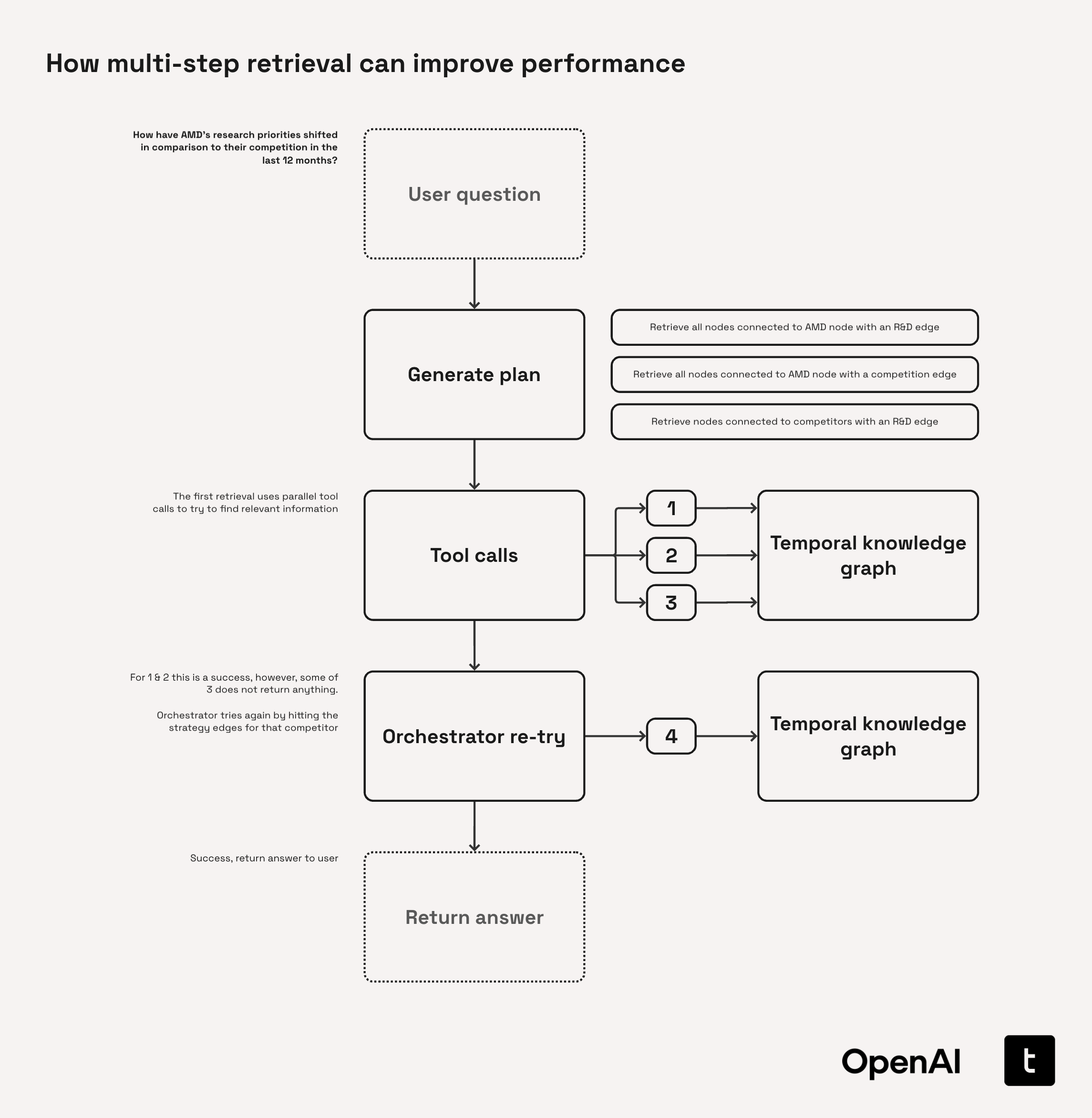

Direct, single-hop queries frequently miss salient facts distributed across a graph's topology. Multi-step (multi-hop) retrieval enables iterative traversal, following relationships and aggregating evidence across several hops. This methodology surfaces complex dependencies and latent connections that would remain hidden with one-shot lookups, providing more comprehensive and nuanced answers to sophisticated queries.

-

Planners

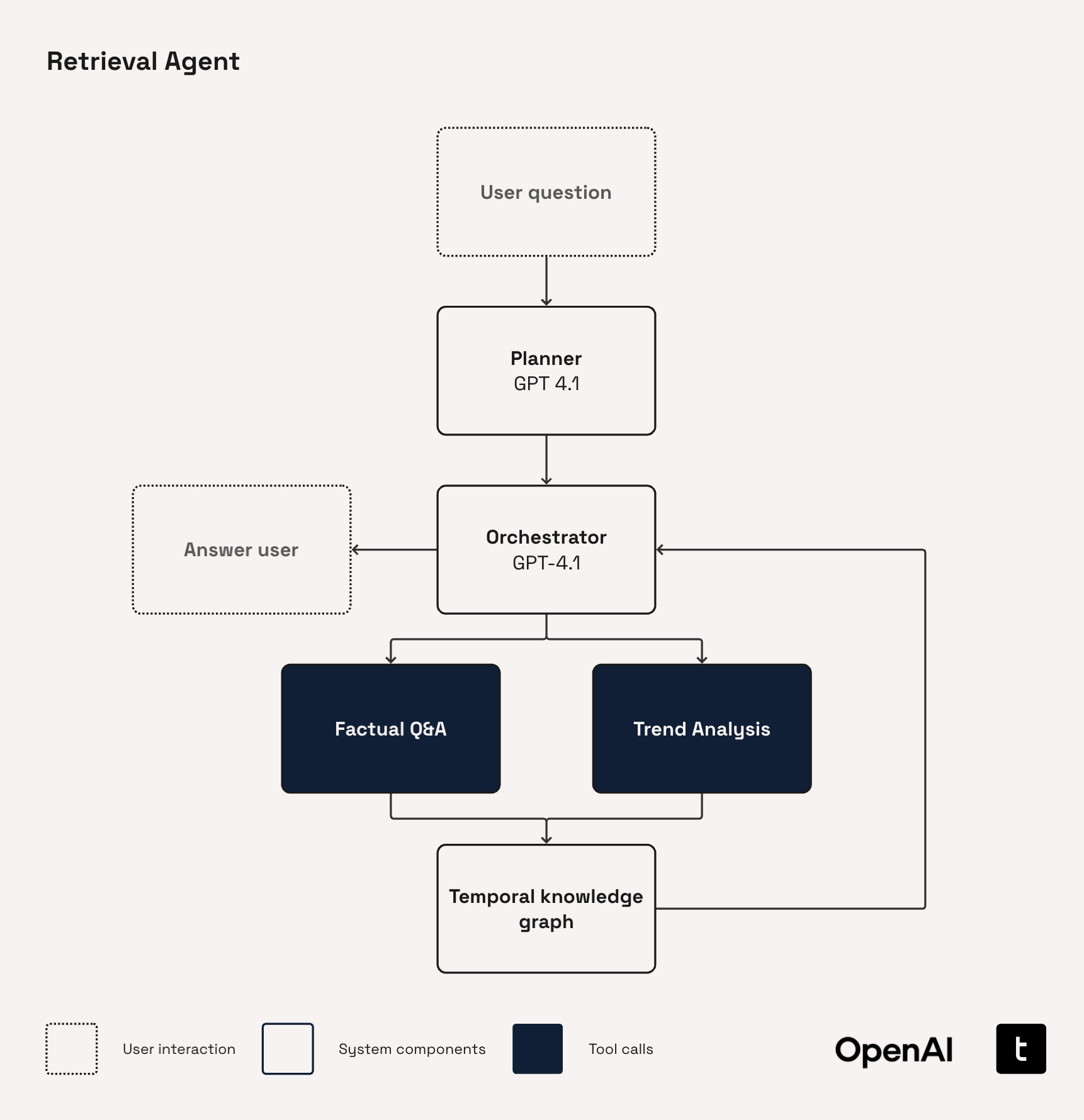

Planners orchestrate the retrieval process. Task-orientated planners decompose queries into concrete, sequential subtasks. Hypothesis-orientated planners, by contrast, propose claims to confirm, refute, or evolve. Choosing the optimal strategy depends on where the problem lies on the spectrum from deterministic reporting (well-defined paths) to exploratory research (open-ended inference).

-

Tool Design Paradigms

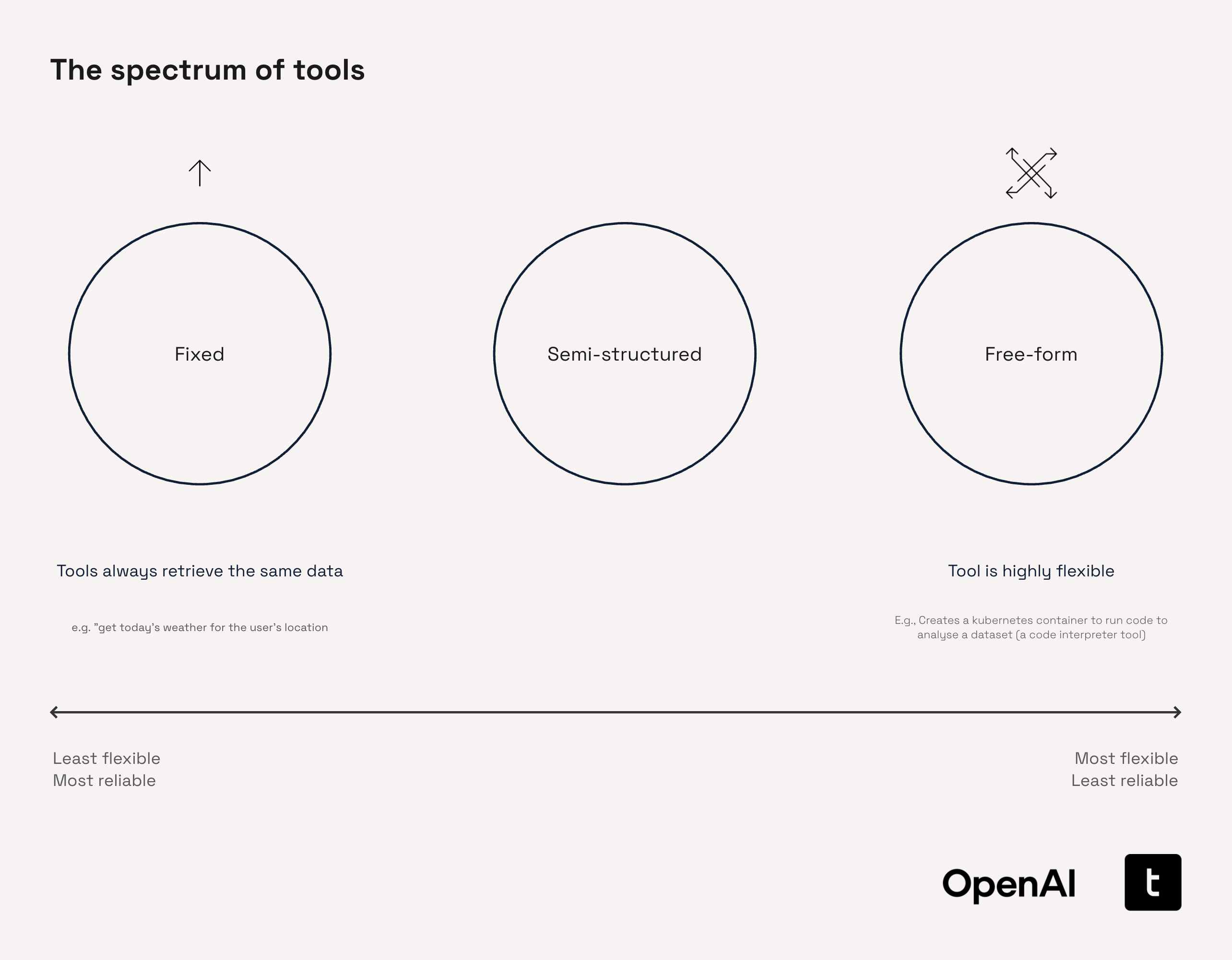

Tool design spans a continuum: Fixed tools provide consistent, predictable outputs for specific queries (e.g., a service that always returns today’s weather for San Francisco). At the other end, Free-form tools offer broad flexibility, such as code execution or open-ended data retrieval. Semi-structured tools fall between these extremes, restricting certain actions while allowing tailored flexibility—specialized sub-agents are a typical example. Selecting the appropriate paradigm is a trade-off between control, adaptability, and complexity.

-

Evaluating Retrieval Systems

High-fidelity evaluation hinges on expert-curated "golden" answers, though these are costly and labor-intensive to produce. Automated judgments, such as those from LLMs or tool traces, can be quickly generated to supplement or pre-screen, but may lack the precision of human evaluation. As your system matures, transition towards leveraging real user feedback to measure and optimize retrieval quality in production.

A proven workflow: Start with synthetic tests, benchmark on your curated human-annotated "golden" dataset, and iteratively refine using live user feedback and ratings.

Prototype to Production

-

Keep the graph lean

Established archival policies and assign numeric relevance scores to each edge (e.g., recency x trust x query-frequency). Automate the archival or sparsification of low-value nodes and edges, ensuring only the most critical and frequently accessed facts remain for rapid retrieval.

-

Parallelize the ingestion pipeline

Transition from a linear document → chunk → extraction → resolution pipeline to a staged, asynchronous architecture. Assign each processing phase its own queue and dedicated worker pool. Apply clustering or network-based batching for invalidation jobs to maximize efficiency. Batch external API requests (e.g., OpenAI) and database writes wherever possible. This design increases throughput, introduces backpressure for reliability, and allows you to scale each pipeline stage independently.

-

Integrate Robust Production Safeguards

Enforce rigorous output validation: standardise temporal fields (e.g., ISO-8601 date formatting), constrain entity types to your controlled vocabulary, and apply lightweight model-based sanity checks for output consistency. Employ structured logging with traceable identifiers and monitor real-time quality and performance metrics in real lime to proactively detect data drift, regressions, or pipeline anomalised before they impact downstream applications.

2. How to Use This Cookbook

This cookbook is designed for flexible engagement:

- Use it as a comprehensive technical guide—read from start to finish for a deep understanding of temporally-aware knowledge graph systems.

- Skim for advanced concepts, methodologies, and implementation patterns if you prefer a high-level overview.

- Jump into any of the three modular sections; each is self-contained and directly applicable to real-world scenarios.

Inside, you'll find:

-

Creating a Temporally-Aware Knowledge Graph with a Temporal Agent

Build a pipeline that extracts entities and relations from unstructured text, resolves temporal conflicts, and keeps your graph up-to-date as new information arrives.

-

Multi-Step Retrieval Over a Knowledge Graph

Use structured queries and language model reasoning to chain multiple hops across your graph and answer complex questions.

-

Prototype to Production

Move from experimentation to deployment. This section covers architectural tips, integration patterns, and considerations for scaling reliably.

2.1. Pre-requisites

Before diving into building temporal agents and knowledge graphs, let's set up your environment. Install all required dependencies with pip, and set your OpenAI API key as an environment variable. Python 3.12 or later is required.

!python -V

%pip install --upgrade pip

%pip install -qU chonkie datetime ipykernel jinja2 matplotlib networkx numpy openai plotly pydantic rapidfuzz scipy tenacity tiktoken pandas

%pip install -q "datasets<3.0"Python 3.12.8 Requirement already satisfied: pip in ./.venv/lib/python3.12/site-packages (25.1.1) Note: you may need to restart the kernel to use updated packages. Note: you may need to restart the kernel to use updated packages. Note: you may need to restart the kernel to use updated packages.

import os

if "OPENAI_API_KEY" not in os.environ:

import getpass

os.environ["OPENAI_API_KEY"] = getpass.getpass("Paste your OpenAI API key here: ")3. Creating a Temporally-Aware Knowledge Graph with a Temporal Agent

Accurate data is the foundation of any good business decision. OpenAI’s latest models like o3, o4-mini, and the GPT-4.1 family are enabling businesses to build state-of-the-art retrieval systems for their most important workflows. However, information evolves rapidly: facts ingested confidently yesterday may already be outdated today.

Without the ability to track when each fact was valid, retrieval systems risk returning answers that are outdated, non-compliant, or misleading. The consequences of missing temporal context can be severe in any industry, as illustrated by the following examples.

| Industry | Example question | Risk if database is not temporal |

|---|---|---|

| Financial Services | "How has Moody’s long‑term rating for Bank YY evolved since Feb 2023?" | Mispricing credit risk by mixing historical & current ratings |

| "Who was the CFO of Retailer ZZ when the FY‑22 guidance was issued?" | Governance/insider‑trading analysis may blame the wrong executive | |

| "Was Fund AA sanctioned under Article BB at the time it bought Stock CC in Jan 2024?" | Compliance report could miss an infraction if rules changed later | |

| Manufacturing / Automotive | "Which ECU firmware was deployed in model Q3 cars shipped between 2022‑05 and 2023‑03?" | Misdiagnosing field failures due to firmware drift |

| "Which robot‑controller software revision ran on Assembly Line 7 during Lot 8421?" | Root‑cause analysis may blame the wrong software revision | |

| "What torque specification applied to steering‑column bolts in builds produced in May 2024?" | Safety recall may miss affected vehicles |

While we've called out some specific examples here, this theme is true across many industries including pharmaceuticals, law, consumer goods, and more.

Looking beyond standard retrieval

A temporally-aware knowledge graph allows you to go beyond static fact lookup. It enables richer retrieval workflows such as factual Q&A grounded in time, timeline generation, change tracking, counterfactual analysis, and more. We dive into these in more detail in our retrieval section later in the cookbook.

3.1. Introducing our Temporal Agent

A temporal agent is a specialized pipeline that converts raw, free-form statements into time-aware triplets ready for ingesting into a knowledge graph that can then be queried with the questions of the character “What was true at time T?”.

Triplets are the basic building blocks of knowledge graphs. It's a way to represent a single fact or piece of knowledge using three parts (hence, "triplet"):

- Subject - the entity you are talking about

- Predicate - the type of relationship or property

- Object - the value or other entity that the subject is connected to

You can thinking of this like a sentence with a structure [Subject] - [Predicate] - [Object]. As a more clear example:

"London" - "isCapitalOf" - "United Kingdom"The Temporal Agent implemented in this cookbook draws inspiration from Zep and Graphiti, while introducing tighter control over fact invalidation and a more nuanced approach to episodic typing.

3.1.1. Key enhancements introduced in this cookbook

-

Temporal validity extraction

Builds on Graphiti's prompt design to identify temporal spans and episodic context without requiring auxiliary reference statements.

-

Fact invalidation logic

Introduces bidirectionality checks and constrains comparisons by episodic type. This retains Zep's non-lossy approach while reducing unnecessary evaluations.

-

Temporal & episodic typing

Differentiates between

Fact,Opinion,Prediction, as well as between temporal classesStatic,Dynamic,Atemporal. -

Multi‑event extraction

Handles compound sentences and nested date references in a single pass.

This process allows us to update our sources of truth efficiently and reliably:

Note: While the implementation in this cookbook is focused on a graph-based implementation, this approach is generalizable to other knowledge base structures e.g., pgvector-based systems.

3.1.2. The Temporal Agent Pipeline

The Temporal Agent processes incoming statements through a three-stage pipeline:

-

Temporal Classification

Labels each statement as Atemporal, Static, or Dynamic:

- Atemporal statements never change (e.g., “The speed of light in a vaccuum is ≈3×10⁸ m s⁻¹”).

- Static statements are valid from a point in time but do not change afterwards (e.g., "Person YY was CEO of Company XX on October 23rd 2014.").

- Dynamic statements evolve (e.g., "Person YY is CEO of Company XX.").

-

Temporal Event Extraction

Identifies relative or partial dates (e.g., “Tuesday”, “three months ago”) and resolves them to an absolute date using the document timestamp or fallback heuristics (e.g., default to the 1st or last of the month if only the month is known).

-

Temporal Validity Check

Ensures every statement includes a

t_createdtimestamp and, when applicable, at_expiredtimestamp. The agent then compares the candidate triplet to existing knowledge graph entries to:- Detect contradictions and mark outdated entries with

t_invalid - Link newer statements to those they invalidate with

invalidated_by

- Detect contradictions and mark outdated entries with

3.1.3. Selecting the right model for a Temporal Agent

When building systems with LLMs, it is a good practice to start with larger models then later look to optimize and shrink.

The GPT-4.1 series is particularly well-suited for building Temporal Agents due to its strong instruction-following ability. On benchmarks like Scale’s MultiChallenge, GPT-4.1 outperforms GPT-4o by $10.5%_{abs}$, demonstrating superior ability to maintain context, reason in-conversation, and adhere to instructions - key traits for extracting time-stamped triplets. These capabilities make it an excellent choice for prototyping agents that rely on time-aware data extraction.

Recommended development workflow

-

Prototype with GPT-4.1

Maximize correctness and reduce prompt-debug time while you build out the core pipeline logic.

-

Swap to GPT-4.1-mini or GPT-4.1-nano

Once prompts and logic are stable, switch to smaller variants for lower latency and cost-effective inference.

-

Distill onto GPT-4.1-mini or GPT-4.1-nano

Use OpenAI's Model Distillation to train smaller models with high-quality outputs from a larger 'teacher' model such as GPT-4.1, preserving (or even improving) performance relative to GPT-4.1.

| Model | Relative cost | Relative latency | Intelligence | Ideal Role in Workflow |

|---|---|---|---|---|

| GPT-4.1 | ★★★ | ★★ | ★★★ (highest) | Ground-truth prototyping, generating data for distillation |

| GPT-4.1-mini | ★★ | ★ | ★★ | Balanced cost-performance, mid to large scale production systems |

| GPT-4.1-nano | ★ (lowest) | ★ (fastest) | ★ | Cost-sensitive and ultra-large scale bulk processing |

In practice, this looks like: prototype with GPT-4.1 → measure quality → step down the ladder until the trade-offs no longer meet your needs.

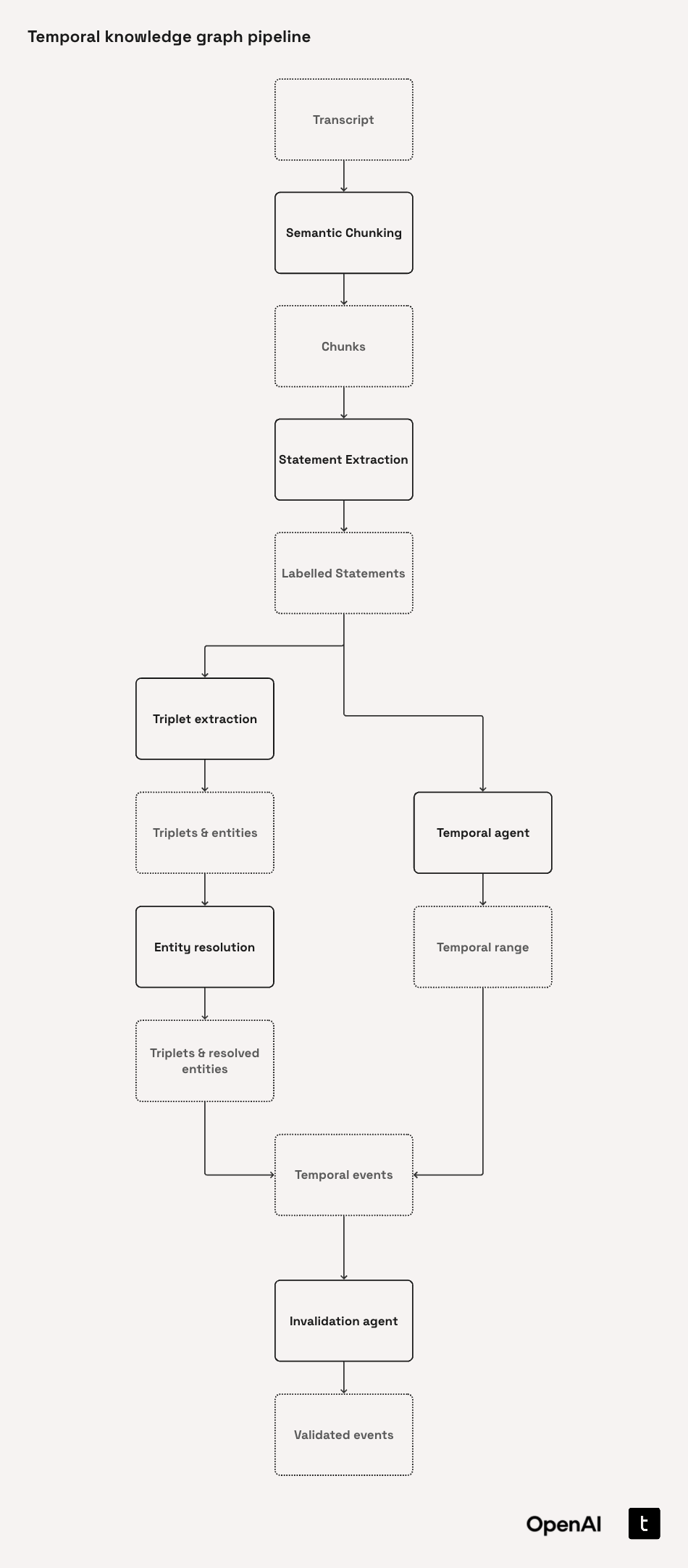

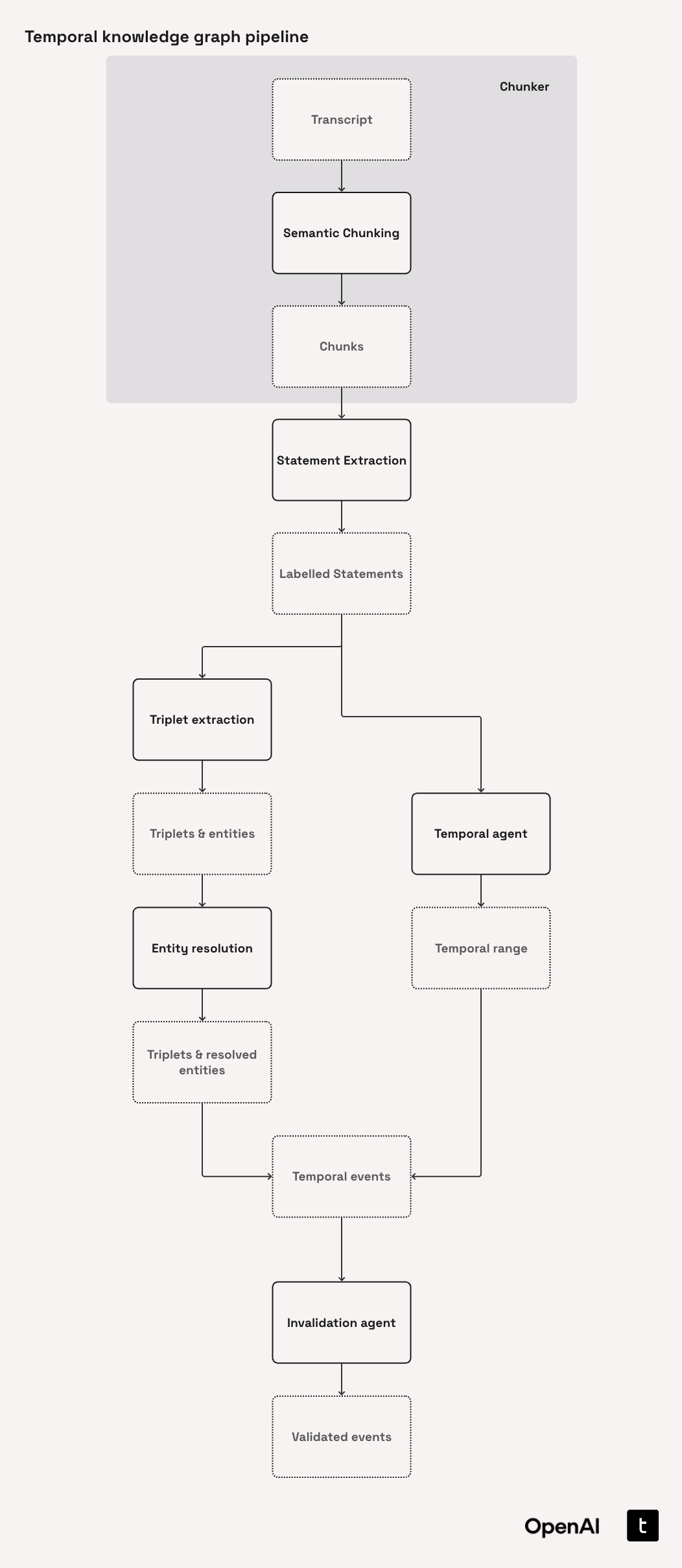

3.2. Building our Temporal Agent Pipeline

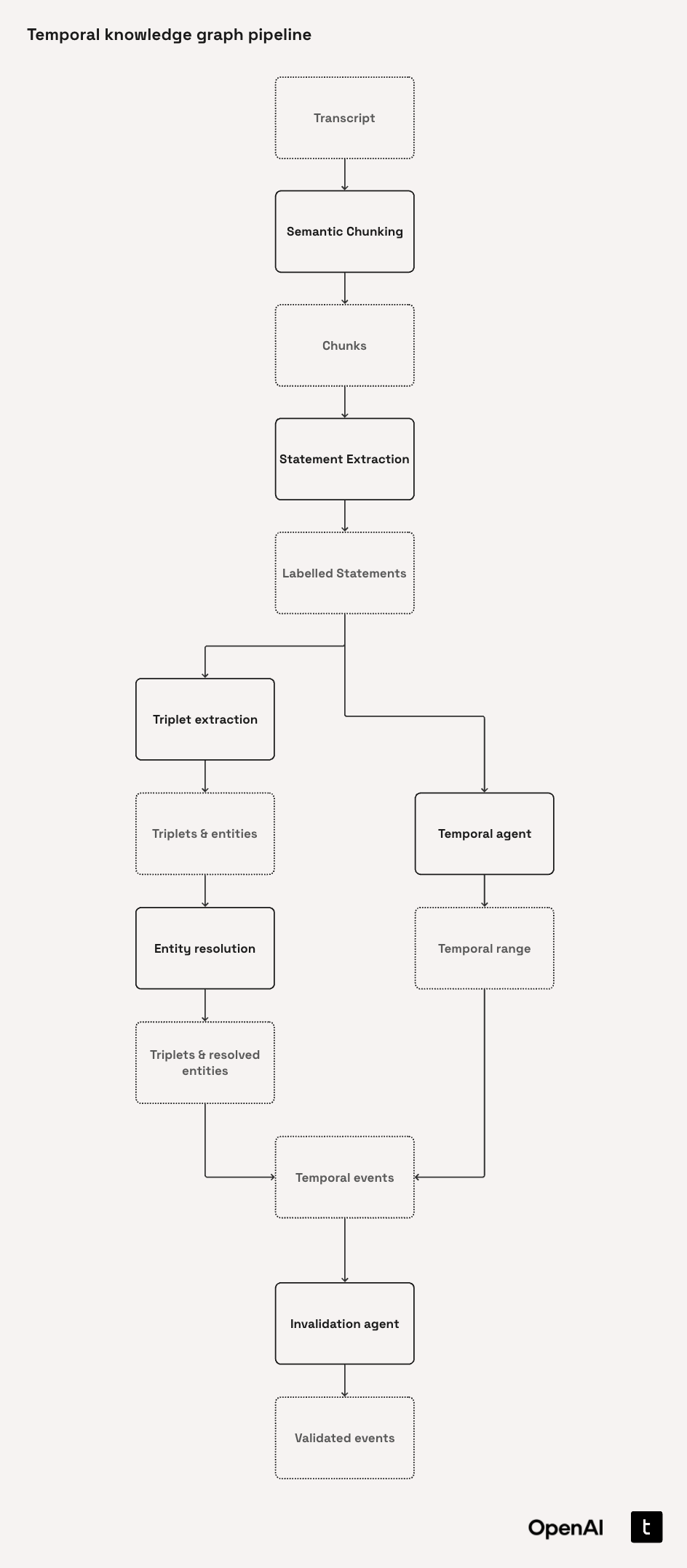

Before diving into the implementation details, it's useful to understand the ingestion pipeline at a high level:

-

Load transcripts

-

Creating a Semantic Chunker

-

Laying the Foundations for our Temporal Agent

-

Statement Extraction

-

Temporal Range Extraction

-

Creating our Triplets

-

Temporal Events

-

Defining our Temporal Agent

-

Entity Resolution

-

Invalidation Agent

-

Building our pipeline

Architecture diagram

3.2.1. Load transcripts

For the purposes of this cookbook, we have selected the "Earnings Calls Dataset" (jlh-ibm/earnings_call) which is made available under the Creative Commons Zero v1.0 license. This dataset contains a collection of 188 earnings call transcripts originating in the period 2016-2020 in relation to the NASDAQ stock market. We believe this dataset is a good choice for this cookbook as extracting information from - and subsequently querying information from - earnings call transcripts is a common problem in many financial institutions around the world.

Moreover, the often variable character of statements and topics from the same company across multiple earnings calls provides a useful vector through which to demonstrate the temporal knowledge graph concept.

Despite this dataset's focus on the financial world, we build up the Temporal Agent in a general structure, so it will be quick to adapt to similar problems in other industries such as pharmaceuticals, law, automotive, and more.

For the purposes of this cookbook we are limiting the processing to two companies - AMD and Nvidia - though in practice this pipeline can easily be scaled to any company.

Let’s start by loading the dataset from HuggingFace.

from datasets import load_dataset

hf_dataset_name = "jlh-ibm/earnings_call"

subset_options = ["stock_prices", "transcript-sentiment", "transcripts"]

hf_dataset = load_dataset(hf_dataset_name, subset_options[2])

my_dataset = hf_dataset["train"]my_datasetDataset({

features: ['company', 'date', 'transcript'],

num_rows: 150

})row = my_dataset[0]

row["company"], row["date"], row["transcript"][:200]from collections import Counter

company_counts = Counter(my_dataset["company"])

company_countsDatabase Set-up

Before we get to processing this data, let’s set up our database.

For convenience within a notebook format, we've chosen SQLite as our database for this implementation. In the "Prototype to Production" section, and in Appendix section A.1 "Storing and Retrieving High-Volume Graph Data" we go into more detail of considerations around different dataset choices in a production environment.

If you are running this cookbook locally, you may chose to set memory = False to save the database to storage, the default file path my_database.db will be used to store your database or you may pass your own db_path arg into make_connection.

We will set up several tables to store the following information:

- Transcripts

- Chunks

- Temporal Events

- Triplets

- Entities (including canonical mappings)

This code is abstracted behind a make_connection method which creates the new SQLite database. The details of this method can be found in the db_interface.py script in the GitHub repository for this cookbook.

from db_interface import make_connection

sqlite_conn = make_connection(memory=False, refresh=True)3.2.2. Creating a Semantic Chunker

Before diving into buidling the Chunker class itself, we begin by defining our first data models. As is generally considered good practice when working with Python, Pydantic is used to ensure type safety and clarity in our model definitions. Pydantic provides a clean, declarative way to define data structures whilst automatically validating and parsing input data, making our data models both robust and easy to work with.

Chunk model

This is a core data model that we'll use to store individual segments of text extracted from transcripts, along with any associated metadata. As we process the transcripts by breaking them into semantically meaningful chunks, each piece will be saved as a separate Chunk.

Each Chunk contains:

id: A unique identifier automatically generated for each chunk. This helps us identify and track chunks of text throughouttext: A string field that contains the text content of the chunkmetadata: A dictionary to allow for flexible metadata storage

import uuid

from typing import Any

from pydantic import BaseModel, Field

class Chunk(BaseModel):

"""A chunk of text from an earnings call."""

id: uuid.UUID = Field(default_factory=uuid.uuid4)

text: str

metadata: dict[str, Any]Transcript model

As the name suggests, we will use the Transcript model to represent the full content of an earnings call transcript. It captures several key pieces of information:

id: Analogous toChunk, this gives us a unique identifiertext: The full text of the transcriptcompany: The name of the company that the earnings call was aboutdate: The date of the earnings callquarter: The fiscal quarter that the earnings call was inchunks: A list ofChunkobjects, each representing a meaningful segment of the full transcript

To ensure the date field is handled correctly, the to_datetime validator is used to convert the value to datetime format.

from datetime import datetime

from pydantic import field_validator

class Transcript(BaseModel):

"""A transcript of a company earnings call."""

id: uuid.UUID = Field(default_factory=uuid.uuid4)

text: str

company: str

date: datetime

quarter: str | None = None

chunks: list[Chunk] | None = None

@field_validator("date", mode="before")

@classmethod

def to_datetime(cls, d: Any) -> datetime:

"""Convert input to a datetime object."""

if isinstance(d, datetime):

return d

if hasattr(d, "isoformat"):

return datetime.fromisoformat(d.isoformat())

return datetime.fromisoformat(str(d))Chunker class

Now, we define the Chunker class to split each transcript into semantically meaningful chunks. Instead of relying on arbitrary rules like character count or line break, we apply semantic chunking to preserve more of the contextual integrity of the original transcript. This ensures that each chunk is a self-contained unit that keeps contextually linked ideas together. This is particularly helpful for downstream tasks like statement extraction, where context heavily influences accuracy.

The chunker class contains two methods:

-

find_quarterThis method attempts to extract the fiscal quarter (e.g., "Q1 2023") directly from the transcript text using a simple regular expression. In this case, this is straightforward as the data format of quarters in the transcripts is consistent and well defined.

However, in real world scenarios, detecting the quarter reliably may require more work. Across multiple sources or document types the detailing of the quarter is likely to be different. LLMs are great tools to help alleviate this issue. Try using GPT-4.1-mini with a prompt specifically to extract the quarter given wider context from the document.

-

generate_transcripts_and_chunksThis is the core method that takes in a dataset (as an iterable of dictionaries) and returns a list of

Transcriptobjects each populated with semantically derivedChunks. It performs the following steps:- Transcript creation: Initializes

Transcriptobjects using the provided text, company, and date fields - Filtering: Uses the

SemanticChunkerfrom chonkie along with OpenAI's text-embedding-3-small model to split the transcript into logical segments - Chunk assignment: Wraps each semantic segment into a

Chunkmodel, attaching relevant metadata like start and end indices

- Transcript creation: Initializes

The chunker falls in to this part of our pipeline:

import re

from concurrent.futures import ThreadPoolExecutor, as_completed

from typing import Any

from chonkie import OpenAIEmbeddings, SemanticChunker

from tqdm import tqdm

class Chunker:

"""

Takes in transcripts of earnings calls and extracts quarter information and splits

the transcript into semantically meaningful chunks using embedding-based similarity.

"""

def __init__(self, model: str = "text-embedding-3-small"):

self.model = model

def find_quarter(self, text: str) -> str | None:

"""Extract the quarter (e.g., 'Q1 2023') from the input text if present, otherwise return None."""

# In this dataset we can just use regex to find the quarter as it is consistently defined

search_results = re.findall(r"[Q]\d\s\d{4}", text)

if search_results:

quarter = str(search_results[0])

return quarter

return None

def generate_transcripts_and_chunks(

self,

dataset: Any,

company: list[str] | None = None,

text_key: str = "transcript",

company_key: str = "company",

date_key: str = "date",

threshold_value: float = 0.7,

min_sentences: int = 3,

num_workers: int = 50,

) -> list[Transcript]:

"""Populate Transcript objects with semantic chunks."""

# Populate the Transcript objects with the passed data on the transcripts

transcripts = [

Transcript(

text=d[text_key],

company=d[company_key],

date=d[date_key],

quarter=self.find_quarter(d[text_key]),

)

for d in dataset

]

if company:

transcripts = [t for t in transcripts if t.company in company]

def _process(t: Transcript) -> Transcript:

if not hasattr(_process, "chunker"):

embed_model = OpenAIEmbeddings(self.model)

_process.chunker = SemanticChunker(

embedding_model=embed_model,

threshold=threshold_value,

min_sentences=max(min_sentences, 1),

)

semantic_chunks = _process.chunker.chunk(t.text)

t.chunks = [

Chunk(

text=c.text,

metadata={

"start_index": getattr(c, "start_index", None),

"end_index": getattr(c, "end_index", None),

},

)

for c in semantic_chunks

]

return t

# Create the semantic chunks and add them to their respective Transcript object using a thread pool

with ThreadPoolExecutor(max_workers=num_workers) as pool:

futures = [pool.submit(_process, t) for t in transcripts]

transcripts = [

f.result()

for f in tqdm(

as_completed(futures),

total=len(futures),

desc="Generating Semantic Chunks",

)

]

return transcripts

raw_data = list(my_dataset)

chunker = Chunker()

transcripts = chunker.generate_transcripts_and_chunks(raw_data)Alternatively, we can load just the AMD and NVDA pre-chunked transcripts from pre-processed files in transcripts/

import pickle

from pathlib import Path

def load_transcripts_from_pickle(directory_path: str = "transcripts/") -> list[Transcript]:

"""Load all pickle files from a directory into a dictionary."""

loaded_transcripts = []

dir_path = Path(directory_path).resolve()

for pkl_file in sorted(dir_path.glob("*.pkl")):

try:

with open(pkl_file, "rb") as f:

transcript = pickle.load(f)

# Ensure it's a Transcript object

if not isinstance(transcript, Transcript):

transcript = Transcript(**transcript)

loaded_transcripts.append(transcript)

print(f"✅ Loaded transcript from {pkl_file.name}")

except Exception as e:

print(f"❌ Error loading {pkl_file.name}: {e}")

return loaded_transcripts# transcripts = load_transcripts_from_pickle()Now we can inspect a couple of chunks:

chunks = transcripts[0].chunks

if chunks is not None:

for i, chunk in enumerate(chunks[21:23]):

print(f"Chunk {i+21}:")

print(f" ID: {chunk.id}")

print(f" Text: {repr(chunk.text[:200])}{'...' if len(chunk.text) > 100 else ''}")

print(f" Metadata: {chunk.metadata}")

print()

else:

print("No chunks found for the first transcript.")With this, we have successfully split our transcripts into semantically sectioned chunks. We can now move onto the next steps in our pipeline.

3.2.3. Laying the Foundations for our Temporal Agent

Before we move onto defining the TemporalAgent class, we will first define the prompts and data models that are needed for it to function.

Formalizing our label definitions

For our temporal agent to be able to accurately extract the statement and temporal types we need to provide it with sufficiently detailed and specific context. For convenience, we define these within a structured format below.

Each label contains three crucial pieces of information that we will later pass to our LLMs in prompts.

-

definition

Provides a concise description of what the label represents. It establishes the conceptual boundaries of the statement or temporal type and ensures consistency in interpretation across examples.

-

date_handling_guidance

Explains how to interpret the temporal validity of a statement associated with the label. It describes how the

valid_atandinvalid_atdates should be derived when processing instances of that label. -

date_handling_examples

Includes illustrative examples of how real-world statements would be labelled and temporally annotated under this label. These will be used as few-shot examples to the LLMs downstream.

LABEL_DEFINITIONS: dict[str, dict[str, dict[str, str]]] = {

"episode_labelling": {

"FACT": dict(

definition=(

"Statements that are objective and can be independently "

"verified or falsified through evidence."

),

date_handling_guidance=(

"These statements can be made up of multiple static and "

"dynamic temporal events marking for example the start, end, "

"and duration of the fact described statement."

),

date_handling_example=(

"'Company A owns Company B in 2022', 'X caused Y to happen', "

"or 'John said X at Event' are verifiable facts which currently "

"hold true unless we have a contradictory fact."

),

),

"OPINION": dict(

definition=(

"Statements that contain personal opinions, feelings, values, "

"or judgments that are not independently verifiable. It also "

"includes hypothetical and speculative statements."

),

date_handling_guidance=(

"This statement is always static. It is a record of the date the "

"opinion was made."

),

date_handling_example=(

"'I like Company A's strategy', 'X may have caused Y to happen', "

"or 'The event felt like X' are opinions and down to the reporters "

"interpretation."

),

),

"PREDICTION": dict(

definition=(

"Uncertain statements about the future on something that might happen, "

"a hypothetical outcome, unverified claims. It includes interpretations "

"and suggestions. If the tense of the statement changed, the statement "

"would then become a fact."

),

date_handling_guidance=(

"This statement is always static. It is a record of the date the "

"prediction was made."

),

date_handling_example=(

"'It is rumoured that Dave will resign next month', 'Company A expects "

"X to happen', or 'X suggests Y' are all predictions."

),

),

},

"temporal_labelling": {

"STATIC": dict(

definition=(

"Often past tense, think -ed verbs, describing single points-in-time. "

"These statements are valid from the day they occurred and are never "

"invalid. Refer to single points in time at which an event occurred, "

"the fact X occurred on that date will always hold true."

),

date_handling_guidance=(

"The valid_at date is the date the event occurred. The invalid_at date "

"is None."

),

date_handling_example=(

"'John was appointed CEO on 4th Jan 2024', 'Company A reported X percent "

"growth from last FY', or 'X resulted in Y to happen' are valid the day "

"they occurred and are never invalid."

),

),

"DYNAMIC": dict(

definition=(

"Often present tense, think -ing verbs, describing a period of time. "

"These statements are valid for a specific period of time and are usually "

"invalidated by a Static fact marking the end of the event or start of a "

"contradictory new one. The statement could already be referring to a "

"discrete time period (invalid) or may be an ongoing relationship (not yet "

"invalid)."

),

date_handling_guidance=(

"The valid_at date is the date the event started. The invalid_at date is "

"the date the event or relationship ended, for ongoing events this is None."

),

date_handling_example=(

"'John is the CEO', 'Company A remains a market leader', or 'X is continuously "

"causing Y to decrease' are valid from when the event started and are invalidated "

"by a new event."

),

),

"ATEMPORAL": dict(

definition=(

"Statements that will always hold true regardless of time therefore have no "

"temporal bounds."

),

date_handling_guidance=(

"These statements are assumed to be atemporal and have no temporal bounds. Both "

"their valid_at and invalid_at are None."

),

date_handling_example=(

"'A stock represents a unit of ownership in a company', 'The earth is round', or "

"'Europe is a continent'. These statements are true regardless of time."

),

),

},

}

3.2.4. Statement Extraction

"Statement Extraction" refers to the process of splitting our semantic chunks into the smallest possible "atomic" facts. Within our Temporal Agent, this is achieved by:

-

Finding every standalone, declarative claim

Extract statements that can stand on their own as complete subject-predicate-object expressions without relying on surrounding context.

-

Ensuring atomicity

Break down complex or compound sentences into minimal, indivisible factual units, each expressing a single relationship.

-

Resolving references

Replace pronouns or abstract references (e.g., "he" or "The Company") with specific entities (e.g., "John Smith", "AMD") using the main subject for disambiguation.

-

Preserving temporal and quantitative precision

Retain explicit dates, durations, and quantities to anchor each fact precisely in time and scale.

-

Labelling each extracted statement

Every statement is annotated with a

StatementTypeand aTemporalType.

Temporal Types

The TemporalType enum provides a standardized set of temporal categories that make it easier to classify and work with statements extracted from earnings call transcripts.

Each category captures a different kind of temporal reference:

- Atemporal: Statements that are universally true and invariant over time (e.g., “The speed of light in a vacuum is ≈3×10⁸ m s⁻¹.”).

- Static: Statements that became true at a specific point in time and remain unchanged thereafter (e.g., “Person YY was CEO of Company XX on October 23rd, 2014.”).

- Dynamic: Statements that may change over time and require temporal context to interpret accurately (e.g., “Person YY is CEO of Company XX.”).

from enum import StrEnum

class TemporalType(StrEnum):

"""Enumeration of temporal types of statements."""

ATEMPORAL = "ATEMPORAL"

STATIC = "STATIC"

DYNAMIC = "DYNAMIC"Statement Types

Similarly, the StatementType enum classifies the nature of each extracted statement, capturing its epistemic characteristics.

- Fact: A statement that asserts a verifiable claim considered true at the time it was made. However, it may later be superseded or contradicted by other facts (e.g., updated information or corrections).

- Opinion: A subjective statement reflecting a speaker’s belief, sentiment, or judgment. By nature, opinions are considered temporally true at the moment they are expressed.

- Prediction: A forward-looking or hypothetical statement about a potential future event or outcome. Temporally, a prediction is assumed to hold true from the time of utterance until the conclusion of the inferred prediction window.

class StatementType(StrEnum):

"""Enumeration of statement types for statements."""

FACT = "FACT"

OPINION = "OPINION"

PREDICTION = "PREDICTION"Raw Statement

The RawStatement model represents an individual statement extracted by an LLM, annotated with both its semantic type (StatementType) and temporal classification (TemporalType). These raw statements serve as intermediate representations and are intended to be transformed into TemporalEvent objects in later processing stages.

Core fields:

statement: The textual content of the extracted statementstatement_type: The type of statement (Fact, Opinion, Prediction), based on theStatementTypeenumtemporal_type: The temporal classification of the statement (Static, Dynamic, Atemporal), drawn from theTemporalTypeenum

The model includes field-level validators to ensure that all type annotations conform to their respective enums, providing a layer of robustness against invalid input.

The companion model RawStatementList contains the output of the statement extraction step: a list of RawStatement instances.

from pydantic import field_validator

class RawStatement(BaseModel):

"""Model representing a raw statement with type and temporal information."""

statement: str

statement_type: StatementType

temporal_type: TemporalType

@field_validator("temporal_type", mode="before")

@classmethod

def _parse_temporal_label(cls, value: str | None) -> TemporalType:

if value is None:

return TemporalType.ATEMPORAL

cleaned_value = value.strip().upper()

try:

return TemporalType(cleaned_value)

except ValueError as e:

raise ValueError(f"Invalid temporal type: {value}. Must be one of {[t.value for t in TemporalType]}") from e

@field_validator("statement_type", mode="before")

@classmethod

def _parse_statement_label(cls, value: str | None = None) -> StatementType:

if value is None:

return StatementType.FACT

cleaned_value = value.strip().upper()

try:

return StatementType(cleaned_value)

except ValueError as e:

raise ValueError(f"Invalid temporal type: {value}. Must be one of {[t.value for t in StatementType]}") from e

class RawStatementList(BaseModel):

"""Model representing a list of raw statements."""

statements: list[RawStatement]Statement Extraction Prompt

This is the core prompt that powers our Temporal Agent's ability to extract and label atomic statements. It is written in Jinja allowing us to modularly compose dynamic inputs without rewriting the core logic.

Anatomy of the prompt

-

Set up the extraction task

We instruct the assistant to behave like a domain expert in finance and clearly define the two subtasks: (i) extracting atomic, declarative statements, and (ii) labelling each with a

statement_typeand atemporal_type. -

Enforces strict extraction guidelines

The rules for extraction help to enforce consistency and clarity. Statements must:

- Be structured as clean subject-predicate-object triplets

- Be self-contained and context-independent

- Resolve co-references (e.g., "he" → "John Smith")

- Include temporal/quantitative qualifiers where present

- Be split when multiple events or temporalities are described

-

Supports plug-and-play definitions

The

{% if definitions %}block makes it easy to inject structured definitions such as statement categories, temporal types, and domain-specific terms. -

Includes few-shot examples

We provide an annotated example chunk and the corresponding JSON output to demonstrate to the model how it should behave.

statement_extraction_prompt = '''

{% macro tidy(name) -%}

{{ name.replace('_', ' ')}}

{%- endmacro %}

You are an expert finance professional and information-extraction assistant.

===Inputs===

{% if inputs %}

{% for key, val in inputs.items() %}

- {{ key }}: {{val}}

{% endfor %}

{% endif %}

===Tasks===

1. Identify and extract atomic declarative statements from the chunk given the extraction guidelines

2. Label these (1) as Fact, Opinion, or Prediction and (2) temporally as Static or Dynamic

===Extraction Guidelines===

- Structure statements to clearly show subject-predicate-object relationships

- Each statement should express a single, complete relationship (it is better to have multiple smaller statements to achieve this)

- Avoid complex or compound predicates that combine multiple relationships

- Must be understandable without requiring context of the entire document

- Should be minimally modified from the original text

- Must be understandable without requiring context of the entire document,

- resolve co-references and pronouns to extract complete statements, if in doubt use main_entity for example:

"your nearest competitor" -> "main_entity's nearest competitor"

- There should be no reference to abstract entities such as 'the company', resolve to the actual entity name.

- expand abbreviations and acronyms to their full form

- Statements are associated with a single temporal event or relationship

- Include any explicit dates, times, or quantitative qualifiers that make the fact precise

- If a statement refers to more than 1 temporal event, it should be broken into multiple statements describing the different temporalities of the event.

- If there is a static and dynamic version of a relationship described, both versions should be extracted

{%- if definitions %}

{%- for section_key, section_dict in definitions.items() %}

==== {{ tidy(section_key) | upper }} DEFINITIONS & GUIDANCE ====

{%- for category, details in section_dict.items() %}

{{ loop.index }}. {{ category }}

- Definition: {{ details.get("definition", "") }}

{% endfor -%}

{% endfor -%}

{% endif -%}

===Examples===

Example Chunk: """

TechNova Q1 Transcript (Edited Version)

Attendees:

* Matt Taylor

ABC Ltd - Analyst

* Taylor Morgan

BigBank Senior - Coordinator

----

On April 1st, 2024, John Smith was appointed CFO of TechNova Inc. He works alongside the current Senior VP Olivia Doe. He is currently overseeing the company’s global restructuring initiative, which began in May 2024 and is expected to continue into 2025.

Analysts believe this strategy may boost profitability, though others argue it risks employee morale. One investor stated, “I think Jane has the right vision.”

According to TechNova’s Q1 report, the company achieved a 10% increase in revenue compared to Q1 2023. It is expected that TechNova will launch its AI-driven product line in Q3 2025.

Since June 2024, TechNova Inc has been negotiating strategic partnerships in Asia. Meanwhile, it has also been expanding its presence in Europe, starting July 2024. As of September 2025, the company is piloting a remote-first work policy across all departments.

Competitor SkyTech announced last month they have developed a new AI chip and launched their cloud-based learning platform.

"""

Example Output: {

"statements": [

{

"statement": "Matt Taylor works at ABC Ltd.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "Matt Taylor is an Analyst.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "Taylor Morgan works at BigBank.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "Taylor Morgan is a Senior Coordinator.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "John Smith was appointed CFO of TechNova Inc on April 1st, 2024.",

"statement_type": "FACT",

"temporal_type": "STATIC"

},

{

"statement": "John Smith has held position CFO of TechNova Inc from April 1st, 2024.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "Olivia Doe is the Senior VP of TechNova Inc.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "John Smith works with Olivia Doe.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "John Smith is overseeing TechNova Inc's global restructuring initiative starting May 2024.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "Analysts believe TechNova Inc's strategy may boost profitability.",

"statement_type": "OPINION",

"temporal_type": "STATIC"

},

{

"statement": "Some argue that TechNova Inc's strategy risks employee morale.",

"statement_type": "OPINION",

"temporal_type": "STATIC"

},

{

"statement": "An investor stated 'I think John has the right vision' on an unspecified date.",

"statement_type": "OPINION",

"temporal_type": "STATIC"

},

{

"statement": "TechNova Inc achieved a 10% increase in revenue in Q1 2024 compared to Q1 2023.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "It is expected that TechNova Inc will launch its AI-driven product line in Q3 2025.",

"statement_type": "PREDICTION",

"temporal_type": "DYNAMIC"

},

{

"statement": "TechNova Inc started negotiating strategic partnerships in Asia in June 2024.",

"statement_type": "FACT",

"temporal_type": "STATIC"

},

{

"statement": "TechNova Inc has been negotiating strategic partnerships in Asia since June 2024.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "TechNova Inc has been expanding its presence in Europe since July 2024.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "TechNova Inc started expanding its presence in Europe in July 2024.",

"statement_type": "FACT",

"temporal_type": "STATIC"

},

{

"statement": "TechNova Inc is going to pilot a remote-first work policy across all departments as of September 2025.",

"statement_type": "FACT",

"temporal_type": "STATIC"

},

{

"statement": "SkyTech is a competitor of TechNova.",

"statement_type": "FACT",

"temporal_type": "DYNAMIC"

},

{

"statement": "SkyTech developed new AI chip.",

"statement_type": "FACT",

"temporal_type": "STATIC"

},

{

"statement": "SkyTech launched cloud-based learning platform.",

"statement_type": "FACT",

"temporal_type": "STATIC"

}

]

}

===End of Examples===

**Output format**

Return only a list of extracted labelled statements in the JSON ARRAY of objects that match the schema below:

{{ json_schema }}

'''3.2.5. Temporal Range Extraction

Raw temporal range

The RawTemporalRange model holds the raw extraction of valid_at and invalid_at date strings for a statement. These both use the date-time supported string property.

valid_atrepresents the start of the validity period for a statementinvalid_atrepresents the end of the validity period for a statement

class RawTemporalRange(BaseModel):

"""Model representing the raw temporal validity range as strings."""

valid_at: str | None = Field(..., json_schema_extra={"format": "date-time"})

invalid_at: str | None = Field(..., json_schema_extra={"format": "date-time"})Temporal validity range

While the RawTemporalRange model preserves the originally extracted date strings, the TemporalValidityRange model transforms these into standardized datetime objects for downstream processing.

It parses the raw valid_at and invalid_at values, converting them from strings into timezone-aware datetime instances. This is handled through a field-level validator.

from utils import parse_date_str

class TemporalValidityRange(BaseModel):

"""Model representing the parsed temporal validity range as datetimes."""

valid_at: datetime | None = None

invalid_at: datetime | None = None

@field_validator("valid_at", "invalid_at", mode="before")

@classmethod

def _parse_date_string(cls, value: str | datetime | None) -> datetime | None:

if isinstance(value, datetime) or value is None:

return value

return parse_date_str(value)Date extraction prompt

Let's now create the prompt that guides our Temporal Agent in accurately determining the temporal validity of statements.

Anatomy of the prompt

This prompt helps the Temporal Agent precisely understand and extract temporal validity ranges.

-

Clearly Defines the Extraction Task

The prompt instructs our model to determine when a statement became true (

valid_at) and optionally when it stopped being true (invalid_at). -

Uses Contextual Guidance

By dynamically incorporating

{{ inputs.temporal_type }}and{{ inputs.statement_type }}, the prompt guides the model in interpreting temporal nuances based on the nature of each statement (like distinguishing facts from predictions or static from dynamic contexts). -

Ensures Consistency with Clear Formatting Rules

To maintain clarity and consistency, the prompt requires all dates to be converted into standardized ISO 8601 date-time formats, normalized to UTC. It explicitly anchors relative expressions (like "last quarter") to known publication dates, making temporal information precise and reliable.

-

Aligns with Business Reporting Cycles

Recognizing the practical need for quarter-based reasoning common in business and financial contexts, the prompt can interpret and calculate temporal ranges based on business quarters, minimizing ambiguity.

-

Adapts to Statement Types for Semantic Accuracy

Specific rules ensure the semantic integrity of statements—for example, opinions might only have a start date (

valid_at) reflecting the moment they were expressed, while predictions will clearly define their forecast window using an end date (invalid_at).

date_extraction_prompt = """

{#

This prompt (template) is adapted from [getzep/graphiti]

Licensed under the Apache License, Version 2.0

Original work:

https://github.com/getzep/graphiti/blob/main/graphiti_core/prompts/extract_edge_dates.py

Modifications made by Tomoro on 2025-04-14

See the LICENSE file for the full Apache 2.0 license text.

#}

{% macro tidy(name) -%}

{{ name.replace('_', ' ')}}

{%- endmacro %}

INPUTS:

{% if inputs %}

{% for key, val in inputs.items() %}

- {{ key }}: {{val}}

{% endfor %}

{% endif %}

TASK:

- Analyze the statement and determine the temporal validity range as dates for the temporal event or relationship described.

- Use the temporal information you extracted, guidelines below, and date of when the statement was made or published. Do not use any external knowledge to determine validity ranges.

- Only set dates if they explicitly relate to the validity of the relationship described in the statement. Otherwise ignore the time mentioned.

- If the relationship is not of spanning nature and represents a single point in time, but you are still able to determine the date of occurrence, set the valid_at only.

{{ inputs.get("temporal_type") | upper }} Temporal Type Specific Guidance:

{% for key, guide in temporal_guide.items() %}

- {{ tidy(key) | capitalize }}: {{ guide }}

{% endfor %}

{{ inputs.get("statement_type") | upper }} Statement Type Specific Guidance:

{%for key, guide in statement_guide.items() %}

- {{ tidy(key) | capitalize }}: {{ guide }}

{% endfor %}

Validity Range Definitions:

- `valid_at` is the date and time when the relationship described by the statement became true or was established.

- `invalid_at` is the date and time when the relationship described by the statement stopped being true or ended. This may be None if the event is ongoing.

General Guidelines:

1. Use ISO 8601 format (YYYY-MM-DDTHH:MM:SS.SSSSSSZ) for datetimes.

2. Use the reference or publication date as the current time when determining the valid_at and invalid_at dates.

3. If the fact is written in the present tense without containing temporal information, use the reference or publication date for the valid_at date

4. Do not infer dates from related events or external knowledge. Only use dates that are directly stated to establish or change the relationship.

5. Convert relative times (e.g., “two weeks ago”) into absolute ISO 8601 datetimes based on the reference or publication timestamp.

6. If only a date is mentioned without a specific time, use 00:00:00 (midnight) for that date.

7. If only year or month is mentioned, use the start or end as appropriate at 00:00:00 e.g. do not select a random date if only the year is mentioned, use YYYY-01-01 or YYYY-12-31.

8. Always include the time zone offset (use Z for UTC if no specific time zone is mentioned).

{% if inputs.get('quarter') and inputs.get('publication_date') %}

9. Assume that {{ inputs.quarter }} ends on {{ inputs.publication_date }} and infer dates for any Qx references from there.

{% endif %}

Statement Specific Rules:

- when `statement_type` is **opinion** only valid_at must be set

- when `statement_type` is **prediction** set its `invalid_at` to the **end of the prediction window** explicitly mentioned in the text.

Never invent dates from outside knowledge.

**Output format**

Return only the validity range in the JSON ARRAY of objects that match the schema below:

{{ json_schema }}

"""3.2.6. Creating our Triplets

We will now build up the definitions and prompts to create the our triplets. As discussed above, these are a combination of:

- Subject - the entity you are talking about

- Predicate - the type of relationship or property

- Object - the value or other entity that the subject is connected to

Let's start with our predicate.

Predicate

The Predicate enum provides a standard set of predicates that clearly describe relationships extracted from text.

We've defined the set of predicates below to be appropriate for earnings call transcripts. Here are some examples for how each of these predicates could fit into a triplet in our knowledge graph: Here are more anonymized, generalized examples following your template:

IS_A: [Company ABC]-[IS_A]-[Software Provider]HAS_A: [Corporation XYZ]-[HAS_A]-[Innovation Division]LOCATED_IN: [Factory 123]-[LOCATED_IN]-[Germany]HOLDS_ROLE: [Jane Doe]-[HOLDS_ROLE]-[CEO at Company LMN]PRODUCES: [Company DEF]-[PRODUCES]-[Smartphone Model X]SELLS: [Retailer 789]-[SELLS]-[Furniture]LAUNCHED: [Company UVW]-[LAUNCHED]-[New Subscription Service]DEVELOPED: [Startup GHI]-[DEVELOPED]-[Cloud-Based Tool]ADOPTED_BY: [New Technology]-[ADOPTED_BY]-[Industry ABC]INVESTS_IN: [Investment Firm JKL]-[INVESTS_IN]-[Clean Energy Startups]COLLABORATES_WITH: [Company PQR]-[COLLABORATES_WITH]-[University XYZ]SUPPLIES: [Manufacturer STU]-[SUPPLIES]-[Auto Components to Company VWX]HAS_REVENUE: [Corporation LMN]-[HAS_REVENUE]-[€500 Million]INCREASED: [Company YZA]-[INCREASED]-[Market Share]DECREASED: [Firm BCD]-[DECREASED]-[Operating Expenses]RESULTED_IN: [Cost Reduction Initiative]-[RESULTED_IN]-[Improved Profit Margins]TARGETS: [Product Launch Campaign]-[TARGETS]-[Millennial Consumers]PART_OF: [Subsidiary EFG]-[PART_OF]-[Parent Corporation HIJ]DISCONTINUED: [Company KLM]-[DISCONTINUED]-[Legacy Product Line]SECURED: [Startup NOP]-[SECURED]-[Series B Funding]

class Predicate(StrEnum):

"""Enumeration of normalised predicates."""

IS_A = "IS_A"

HAS_A = "HAS_A"

LOCATED_IN = "LOCATED_IN"

HOLDS_ROLE = "HOLDS_ROLE"

PRODUCES = "PRODUCES"

SELLS = "SELLS"

LAUNCHED = "LAUNCHED"

DEVELOPED = "DEVELOPED"

ADOPTED_BY = "ADOPTED_BY"

INVESTS_IN = "INVESTS_IN"

COLLABORATES_WITH = "COLLABORATES_WITH"

SUPPLIES = "SUPPLIES"

HAS_REVENUE = "HAS_REVENUE"

INCREASED = "INCREASED"

DECREASED = "DECREASED"

RESULTED_IN = "RESULTED_IN"

TARGETS = "TARGETS"

PART_OF = "PART_OF"

DISCONTINUED = "DISCONTINUED"

SECURED = "SECURED"We also assign a definition to each predicate, which we will then pass to the extraction prompt downstream.

PREDICATE_DEFINITIONS = {

"IS_A": "Denotes a class-or-type relationship between two entities (e.g., 'Model Y IS_A electric-SUV'). Includes 'is' and 'was'.",

"HAS_A": "Denotes a part-whole relationship between two entities (e.g., 'Model Y HAS_A electric-engine'). Includes 'has' and 'had'.",

"LOCATED_IN": "Specifies geographic or organisational containment or proximity (e.g., headquarters LOCATED_IN Berlin).",

"HOLDS_ROLE": "Connects a person to a formal office or title within an organisation (CEO, Chair, Director, etc.).",

"PRODUCES": "Indicates that an entity manufactures, builds, or creates a product, service, or infrastructure (includes scale-ups and component inclusion).",

"SELLS": "Marks a commercial seller-to-customer relationship for a product or service (markets, distributes, sells).",

"LAUNCHED": "Captures the official first release, shipment, or public start of a product, service, or initiative.",

"DEVELOPED": "Shows design, R&D, or innovation origin of a technology, product, or capability. Includes 'researched' or 'created'.",

"ADOPTED_BY": "Indicates that a technology or product has been taken up, deployed, or implemented by another entity.",

"INVESTS_IN": "Represents the flow of capital or resources from one entity into another (equity, funding rounds, strategic investment).",

"COLLABORATES_WITH": "Generic partnership, alliance, joint venture, or licensing relationship between entities.",

"SUPPLIES": "Captures vendor–client supply-chain links or dependencies (provides to, sources from).",

"HAS_REVENUE": "Associates an entity with a revenue amount or metric—actual, reported, or projected.",

"INCREASED": "Expresses an upward change in a metric (revenue, market share, output) relative to a prior period or baseline.",

"DECREASED": "Expresses a downward change in a metric relative to a prior period or baseline.",

"RESULTED_IN": "Captures a causal relationship where one event or factor leads to a specific outcome (positive or negative).",

"TARGETS": "Denotes a strategic objective, market segment, or customer group that an entity seeks to reach.",

"PART_OF": "Expresses hierarchical membership or subset relationships (division, subsidiary, managed by, belongs to).",

"DISCONTINUED": "Indicates official end-of-life, shutdown, or termination of a product, service, or relationship.",

"SECURED": "Marks the successful acquisition of funding, contracts, assets, or rights by an entity.",

}Defining your own predicates

When working with different data sources, you'll want to define your own predicates that are specific to your use case.

To define your own predicates:

- First, run your pipeline with

PREDICATE_DEFINITIONS = {}on a representative sample of your documents. This initial run will derive a noisy graph with many non-standardized and overlapping predicates - Next, drop some of your intial results into ChatGPT or manually review them to merge similar predicate classes. This process helps to eliminate duplicates such as

IS_CEOandIS_CEO_OF - Finally, carefully review and refine this list of predicates to ensure clarity and precision. These finalized predicate definitions will then guide your extraction process and ensure a consistent extraction pipeline

Raw triplet

With predicates now well-defined, we can begin building up the data models for our triplets.

The RawTriplet model represents a basic subject-predicate-object relationship that is extracted directly from textual data. This serves as a precursor for the more detailed triplet representation in Triplet which we introduce later.

Core fields:

subject_name: The textual representation of the subject entitysubject_id: Numeric identifier for the subject entitypredicate: The relationship type, specified by thePredicateenumobject_name: The textual representation of the object entityobject_id: Numeric identifier for the object entityvalue: Numeric value associated to relationship, may be None e.g.Company->HAS_A->Revenuewithvalue='$100 mill'

class RawTriplet(BaseModel):

"""Model representing a subject-predicate-object triplet."""

subject_name: str

subject_id: int

predicate: Predicate

object_name: str

object_id: int

value: str | None = NoneTriplet

The Triplet model extends the RawTriplet by incorporating unique identifiers and optionally linking each triplet to a specific event. These identifiers help with integration into structured knowledge bases like our temporal knowledge graph.

class Triplet(BaseModel):

"""Model representing a subject-predicate-object triplet."""

id: uuid.UUID = Field(default_factory=uuid.uuid4)

event_id: uuid.UUID | None = None

subject_name: str

subject_id: int | uuid.UUID

predicate: Predicate

object_name: str

object_id: int | uuid.UUID

value: str | None = None

@classmethod

def from_raw(cls, raw_triplet: "RawTriplet", event_id: uuid.UUID | None = None) -> "Triplet":

"""Create a Triplet instance from a RawTriplet, optionally associating it with an event_id."""

return cls(

id=uuid.uuid4(),

event_id=event_id,

subject_name=raw_triplet.subject_name,

subject_id=raw_triplet.subject_id,

predicate=raw_triplet.predicate,

object_name=raw_triplet.object_name,

object_id=raw_triplet.object_id,

value=raw_triplet.value,

)RawEntity

The RawEntity model represents an Entity as extracted from the Statement. This serves as a precursor for the more detailed triplet representation in Entity which we introduce next.

Core fields:

entity_idx: An integer to differentiate extracted entites from the statement (links toRawTriplet)name: The name of the entity extracted e.g.AMDtype: The type of entity extracted e.g.Companydescription: The textual description of the entity e.g.Technology company know for manufacturing semiconductors

class RawEntity(BaseModel):

"""Model representing an entity (for entity resolution)."""

entity_idx: int

name: str

type: str = ""

description: str = ""Entity

The Entity model extends the RawEntity by incorporating unique identifiers and optionally linking each entity to a specific event.

Additionally, it contains resolved_id which will be populated during entity resolution with the canonical entity's id to remove duplicate naming of entities in the database.

These updated identifiers help with integration and linking of entities to events and triplets .

class Entity(BaseModel):

"""

Model representing an entity (for entity resolution).

'id' is the canonical entity id if this is a canonical entity.

'resolved_id' is set to the canonical id if this is an alias.

"""

id: uuid.UUID = Field(default_factory=uuid.uuid4)

event_id: uuid.UUID | None = None

name: str

type: str

description: str

resolved_id: uuid.UUID | None = None

@classmethod

def from_raw(cls, raw_entity: "RawEntity", event_id: uuid.UUID | None = None) -> "Entity":

"""Create an Entity instance from a RawEntity, optionally associating it with an event_id."""

return cls(

id=uuid.uuid4(),

event_id=event_id,

name=raw_entity.name,

type=raw_entity.type,

description=raw_entity.description,

resolved_id=None,

)Raw extraction

Both RawTriplet and RawEntity are extracted at the same time per Statement to reduce LLM calls and to allow easy referencing of Entities through Triplets.

class RawExtraction(BaseModel):

"""Model representing a triplet extraction."""

triplets: list[RawTriplet]

entities: list[RawEntity]Triplet Extraction Prompt

The prompt below guides our Temporal Agent to effectively extract triplets and entities from provided statements.

Anatomy of the prompt

-

Avoids temporal details

The agent is specifically instructed to ignore temporal relationships, as these are captured separately within the

TemporalValidityRange. DefinedPredicatesare deliberately designed to be time-neutral—for instance,HAS_Acovers both present (HAS_A) and past (HAD_A) contexts. -

Maintains structured outputs

The prompt yields structured

RawExtractionoutputs, supported by detailed examples that clearly illustrate:- How to extract information from a given

Statement - How to link

Entitieswith correspondingTriplets - How to handle extracted

values - How to manage multiple

Tripletsinvolving the sameEntity

- How to extract information from a given

triplet_extraction_prompt = """

You are an information-extraction assistant.

**Task:** You are going to be given a statement. Proceed step by step through the guidelines.

**Statement:** "{{ statement }}"

**Guidelines**

First, NER:

- Identify the entities in the statement, their types, and context independent descriptions.

- Do not include any lengthy quotes from the reports

- Do not include any calendar dates or temporal ranges or temporal expressions

- Numeric values should be extracted as separate entities as an instance_of _Numeric_, where the name is the units as a string and the numeric_value is the value. e.g: £30 -> name: 'GBP', numeric_value: 30, instance_of: 'Numeric'

Second, Triplet extraction:

- Identify the subject entity of that predicate – the main entity carrying out the action or being described.

- Identify the object entity of that predicate – the entity, value, or concept that the predicate affects or describes.

- Identify a predicate between the entities expressed in the statement, such as 'is', 'works at', 'believes', etc. Follow the schema below if given.

- Extract the corresponding (subject, predicate, object, date) knowledge triplet.

- Exclude all temporal expressions (dates, years, seasons, etc.) from every field.

- Repeat until all predicates contained in the statement have been extracted form the statements.

{%- if predicate_instructions -%}

-------------------------------------------------------------------------

Predicate Instructions:

Please try to stick to the following predicates, do not deviate unless you can't find a relevant definition.

{%- for pred, instruction in predicate_instructions.items() -%}

- {{ pred }}: {{ instruction }}

{%- endfor -%}

-------------------------------------------------------------------------

{%- endif -%}

Output:

List the entities and triplets following the JSON schema below. Return ONLY with valid JSON matching this schema.

Do not include any commentary or explanation.

{{ json_schema }}

===Examples===

Example 1 Statement: "Google's revenue increased by 10% from January through March."

Example 1 Output: {

"triplets": [

{

"subject_name": "Google",

"subject_id": 0,

"predicate": "INCREASED",

"object_name": "Revenue",

"object_id": 1,

"value": "10%",

}

],

"entities": [

{

"entity_idx": 0,

"name": "Google",

"type": "Organization",

"description": "Technology Company",

},

{

"entity_idx": 1,

"name": "Revenue",

"type": "Financial Metric",

"description": "Income of a Company",

}

]

}

Example 2 Statement: "Amazon developed a new AI chip in 2024."

Example 2 Output:

{

"triplets": [

{

"subject_name": "Amazon",

"subject_id": 0,

"predicate": "DEVELOPED",

"object_name": "AI chip",

"object_id": 1,

"value": None,

},

],

"entities": [

{

"entity_idx": 0,

"name": "Amazon",

"type": "Organization",

"description": "E-commerce and cloud computing company"

},

{

"entity_idx": 1,

"name": "AI chip",

"type": "Technology",

"description": "Artificial intelligence accelerator hardware"

}

]

}

Example 3 Statement: "It is expected that TechNova Inc will launch its AI-driven product line in Q3 2025.",

Example 3 Output:{

"triplets": [

{

"subject_name": "TechNova",

"subject_id": 0,

"predicate": "LAUNCHED",

"object_name": "AI-driven Product",

"object_id": 1,

"value": "None,

}

],

"entities": [

{

"entity_idx": 0,

"name": "TechNova",

"type": "Organization",

"description": "Technology Company",

},

{

"entity_idx": 1,

"name": "AI-driven Product",

"type": "Product",

"description": "General AI products",

}

]

}

Example 4 Statement: "The SVP, CFO and Treasurer of AMD spoke during the earnings call."

Example 4 Output: {

"triplets": [],

"entities":[].

}

===End of Examples===

"""3.2.7. Temporal Event

The TemporalEvent model brings together the Statement and all related information into one handy class. It's a primary output of the TemporalAgent and plays an important role within the InvalidationAgent.

Main fields include:

id: A unique identifier for the eventchunk_id: Points to the specificChunkassociated with the eventstatement: The specificRawStatementextracted from theChunkdetailing a relationship or eventembedding: A representation of thestatementused by theInvalidationAgentto gauge event similaritytriplets: Unique identifiers for the individualTripletsextracted from theStatementvalid_at: Timestamp indicating when the event becomes validinvalid_at: Timestamp indicating when the event becomes invalidtemporal_type: Describes temporal characteristics from theRawStatementstatement_type: Categorizes the statement according to the originalRawStatementcreated_at: Date the event was first created.expired_at: Date the event was marked invalid (set tocreated_atifinvalid_atis already set when building theTemporalEvent)invalidated_by: ID of theTemporalEventresponsible for invalidating this event, if applicable

import json

from pydantic import model_validator

class TemporalEvent(BaseModel):

"""Model representing a temporal event with statement, triplet, and validity information."""

id: uuid.UUID = Field(default_factory=uuid.uuid4)

chunk_id: uuid.UUID

statement: str

embedding: list[float] = Field(default_factory=lambda: [0.0] * 256)

triplets: list[uuid.UUID]

valid_at: datetime | None = None

invalid_at: datetime | None = None

temporal_type: TemporalType

statement_type: StatementType

created_at: datetime = Field(default_factory=datetime.now)

expired_at: datetime | None = None

invalidated_by: uuid.UUID | None = None

@property

def triplets_json(self) -> str:

"""Convert triplets list to JSON string."""

return json.dumps([str(t) for t in self.triplets]) if self.triplets else "[]"

@classmethod

def parse_triplets_json(cls, triplets_str: str) -> list[uuid.UUID]:

"""Parse JSON string back into list of UUIDs."""

if not triplets_str or triplets_str == "[]":

return []

return [uuid.UUID(t) for t in json.loads(triplets_str)]

@model_validator(mode="after")

def set_expired_at(self) -> "TemporalEvent":

"""Set expired_at if invalid_at is set and temporal_type is DYNAMIC."""

self.expired_at = self.created_at if (self.invalid_at is not None) and (self.temporal_type == TemporalType.DYNAMIC) else None

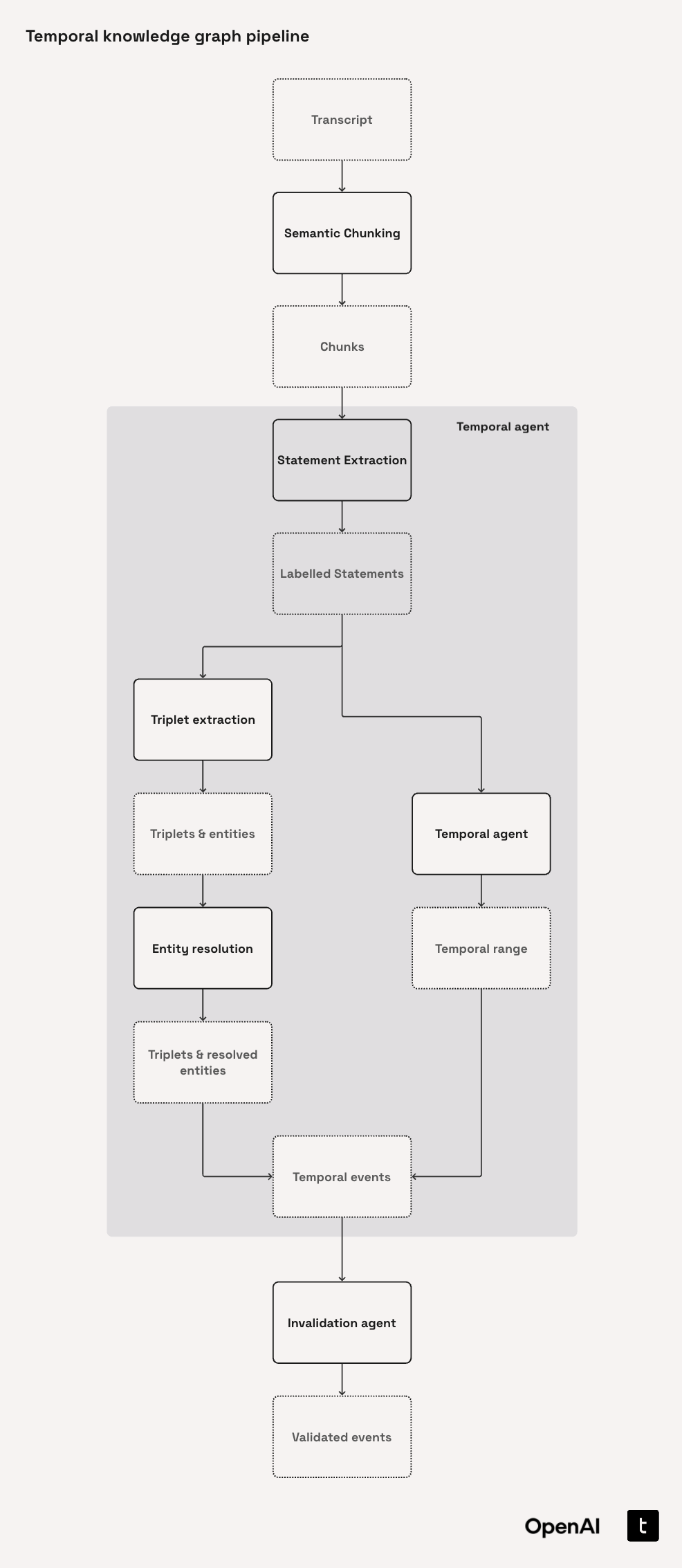

return self3.2.8. Defining our Temporal Agent

Now we arrive at a central point in our pipeline: The TemporalAgent class. This brings together the steps we've built up above - chunking, data models, and prompts. Let's take a closer look at how this works.

The core function, extract_transcript_events, handles all key processes:

- It extracts a

RawStatementfrom eachChunk. - From each

RawStatement, it identifies theTemporalValidityRangealong with lists of relatedTripletandEntityobjects. - Finally, it bundles all this information neatly into a

TemporalEventfor eachRawStatement.

Here's what you'll get:

transcript: The transcript currently being analyzed.all_events: A comprehensive list of all generatedTemporalEventobjects.all_triplets: A complete collection ofTripletobjects extracted across all events.all_entities: A detailed list of allEntityobjects pulled from the events, which will be further refined in subsequent steps.

The diagram below visualizes this portion of our pipeline:

import asyncio

from typing import Any

from jinja2 import DictLoader, Environment

from openai import AsyncOpenAI

from tenacity import retry, stop_after_attempt, wait_random_exponential

class TemporalAgent:

"""Handles temporal-based operations for extracting and processing temporal events from text."""

def __init__(self) -> None:

"""Initialize the TemporalAgent with a client."""

self._client = AsyncOpenAI()

self._model = "gpt-4.1-mini"

self._env = Environment(loader=DictLoader({

"statement_extraction.jinja": statement_extraction_prompt,

"date_extraction.jinja": date_extraction_prompt,

"triplet_extraction.jinja": triplet_extraction_prompt,

}))

self._env.filters["split_and_capitalize"] = self.split_and_capitalize

@staticmethod

def split_and_capitalize(value: str) -> str:

"""Split dict key string and reformat for jinja prompt."""

return " ".join(value.split("_")).capitalize()

async def get_statement_embedding(self, statement: str) -> list[float]:

"""Get the embedding of a statement."""

response = await self._client.embeddings.create(

model="text-embedding-3-large",

input=statement,

dimensions=256,

)

return response.data[0].embedding

@retry(wait=wait_random_exponential(multiplier=1, min=1, max=30), stop=stop_after_attempt(3))

async def extract_statements(

self,

chunk: Chunk,

inputs: dict[str, Any],

) -> RawStatementList:

"""Determine initial validity date range for a statement.

Args:

chunk (Chunk): The chunk of text to analyze.

inputs (dict[str, Any]): Additional input parameters for extraction.

Returns:

Statement: Statement with updated temporal range.

"""

inputs["chunk"] = chunk.text

template = self._env.get_template("statement_extraction.jinja")

prompt = template.render(

inputs=inputs,

definitions=LABEL_DEFINITIONS,

json_schema=RawStatementList.model_fields,

)

response = await self._client.responses.parse(

model=self._model,

temperature=0,

input=prompt,

text_format=RawStatementList,

)

raw_statements = response.output_parsed

statements = RawStatementList.model_validate(raw_statements)

return statements

@retry(wait=wait_random_exponential(multiplier=1, min=1, max=30), stop=stop_after_attempt(3))

async def extract_temporal_range(

self,

statement: RawStatement,

ref_dates: dict[str, Any],

) -> TemporalValidityRange:

"""Determine initial validity date range for a statement.

Args:

statement (Statement): Statement to analyze.

ref_dates (dict[str, Any]): Reference dates for the statement.

Returns:

Statement: Statement with updated temporal range.

"""

if statement.temporal_type == TemporalType.ATEMPORAL:

return TemporalValidityRange(valid_at=None, invalid_at=None)

template = self._env.get_template("date_extraction.jinja")

inputs = ref_dates | statement.model_dump()

prompt = template.render(

inputs=inputs,

temporal_guide={statement.temporal_type.value: LABEL_DEFINITIONS["temporal_labelling"][statement.temporal_type.value]},

statement_guide={statement.statement_type.value: LABEL_DEFINITIONS["episode_labelling"][statement.statement_type.value]},

json_schema=RawTemporalRange.model_fields,

)

response = await self._client.responses.parse(

model=self._model,

temperature=0,

input=prompt,

text_format=RawTemporalRange,

)

raw_validity = response.output_parsed

temp_validity = TemporalValidityRange.model_validate(raw_validity.model_dump()) if raw_validity else TemporalValidityRange()

if temp_validity.valid_at is None:

temp_validity.valid_at = inputs["publication_date"]

if statement.temporal_type == TemporalType.STATIC:

temp_validity.invalid_at = None

return temp_validity

@retry(wait=wait_random_exponential(multiplier=1, min=1, max=30), stop=stop_after_attempt(3))

async def extract_triplet(