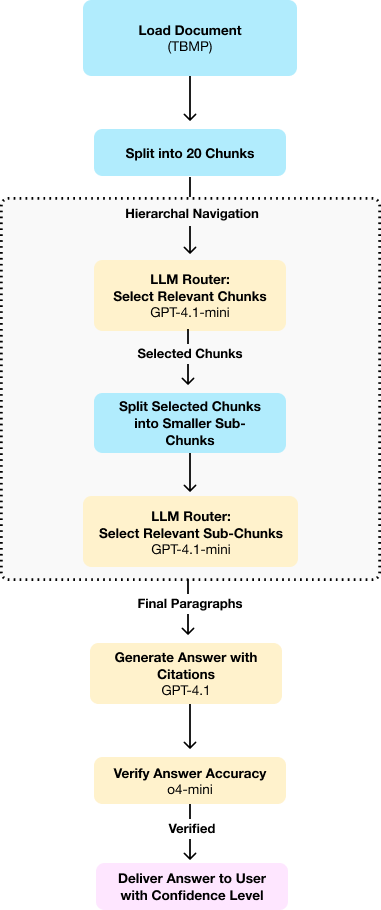

Split document into 20 chunks

Chunk 0: 42326 tokens

Chunk 1: 42093 tokens

Chunk 2: 42107 tokens

Chunk 3: 39797 tokens

Chunk 4: 58959 tokens

Chunk 5: 48805 tokens

Chunk 6: 37243 tokens

Chunk 7: 33453 tokens

Chunk 8: 38644 tokens

Chunk 9: 49402 tokens

Chunk 10: 51568 tokens

Chunk 11: 49586 tokens

Chunk 12: 47722 tokens

Chunk 13: 48952 tokens

Chunk 14: 44994 tokens

Chunk 15: 50286 tokens

Chunk 16: 54424 tokens

Chunk 17: 62651 tokens

Chunk 18: 47430 tokens

Chunk 19: 42507 tokens
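
The log does not show how the splitter works, so the following is only a minimal sketch of one way to get a listing like the one above. It assumes chunks are packed from whole sentences (which would explain the uneven sizes) and uses whitespace word counts as a stand-in for a real tokenizer. The names `count_tokens` and `split_into_chunks` are hypothetical.

```python
import re


def count_tokens(text: str) -> int:
    # Assumption: whitespace-separated words approximate tokenizer tokens.
    return len(text.split())


def split_into_chunks(text: str, n_chunks: int = 20) -> list[str]:
    """Greedily pack whole sentences into at most n_chunks pieces."""
    sentences = [s for s in re.split(r"(?<=[.!?])\s+", text.strip()) if s]
    if not sentences:
        return []
    target = max(1, count_tokens(text) // n_chunks)
    chunks: list[str] = []
    current: list[str] = []
    current_tokens = 0
    for sentence in sentences:
        current.append(sentence)
        current_tokens += count_tokens(sentence)
        # Close the chunk at the target size, but keep the final slot
        # open so the remainder always lands in the last chunk.
        if current_tokens >= target and len(chunks) < n_chunks - 1:
            chunks.append(" ".join(current))
            current, current_tokens = [], 0
    if current:
        chunks.append(" ".join(current))
    return chunks


def describe(chunks: list[str]) -> list[str]:
    # Mirror the log format: "Chunk i: N tokens".
    return [f"Chunk {i}: {count_tokens(c)} tokens" for i, c in enumerate(chunks)]
```

Because sentences are never cut, chunk sizes drift around the target rather than matching it exactly, which is consistent with the spread seen in the listing.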

==== ROUTING AT DEPTH 0 ====

Evaluating 20 chunks for relevance

Selected chunks: 0, 1, 2, 3, 4, 5, 6, 7, 8

Updated scratchpad:

DEPTH 0 REASONING:

The user wants to know the format requirements for filing a motion to compel discovery and how signatures should be handled for such motions.

Based on the evaluation of chunks:

- Chunks 0, 1, 2, 3, 4, 5, 6, 7, 8 are highly relevant since they cover general requirements for submissions, motions, signatures, service, and specifically for motions and discovery in TTAB proceedings.

- These chunks contain detailed info about electronic filing (via ESTTA), paper filing exceptions, signature requirements, service requirements, format of submissions (including motions), timing rules, and professionals' responsibilities.

- Additionally, the rules for motions to compel, including required attachments, timing, and certification of good faith efforts to resolve discovery disputes, are specifically outlined.

- Chunks 11-19 mostly cover post-trial and appeal procedures, less directly relevant.

I will select these relevant chunks to provide a thorough answer about how motions to compel discovery should be filed and how signatures on such motions are handled.

Split document into 20 chunks

Chunk 0: 3539 tokens

Chunk 1: 2232 tokens

Chunk 2: 1746 tokens

Chunk 3: 3078 tokens

Chunk 4: 1649 tokens

Chunk 5: 2779 tokens

Chunk 6: 2176 tokens

Chunk 7: 1667 tokens

Chunk 8: 1950 tokens

Chunk 9: 1730 tokens

Chunk 10: 1590 tokens

Chunk 11: 1964 tokens

Chunk 12: 1459 tokens

Chunk 13: 2070 tokens

Chunk 14: 2422 tokens

Chunk 15: 1976 tokens

Chunk 16: 2335 tokens

Chunk 17: 2694 tokens

Chunk 18: 2282 tokens

Chunk 19: 982 tokens

Split document into 20 chunks

Chunk 0: 2880 tokens

Chunk 1: 1323 tokens

Chunk 2: 2088 tokens

Chunk 3: 1493 tokens

Chunk 4: 2466 tokens

Chunk 5: 2563 tokens

Chunk 6: 2981 tokens

Chunk 7: 2723 tokens

Chunk 8: 2264 tokens

Chunk 9: 1900 tokens

Chunk 10: 2134 tokens

Chunk 11: 1778 tokens

Chunk 12: 2484 tokens

Chunk 13: 1922 tokens

Chunk 14: 2237 tokens

Chunk 15: 2044 tokens

Chunk 16: 2097 tokens

Chunk 17: 1326 tokens

Chunk 18: 2427 tokens

Chunk 19: 962 tokens

Split document into 20 chunks

Chunk 0: 2341 tokens

Chunk 1: 1724 tokens

Chunk 2: 2042 tokens

Chunk 3: 3225 tokens

Chunk 4: 1617 tokens

Chunk 5: 2247 tokens

Chunk 6: 1741 tokens

Chunk 7: 1914 tokens

Chunk 8: 2027 tokens

Chunk 9: 2596 tokens

Chunk 10: 2366 tokens

Chunk 11: 2164 tokens

Chunk 12: 2471 tokens

Chunk 13: 1821 tokens

Chunk 14: 1496 tokens

Chunk 15: 1712 tokens

Chunk 16: 1909 tokens

Chunk 17: 1961 tokens

Chunk 18: 2309 tokens

Chunk 19: 2419 tokens

Split document into 20 chunks

Chunk 0: 2304 tokens

Chunk 1: 2140 tokens

Chunk 2: 1845 tokens

Chunk 3: 3053 tokens

Chunk 4: 2008 tokens

Chunk 5: 2052 tokens

Chunk 6: 2240 tokens

Chunk 7: 1943 tokens

Chunk 8: 1732 tokens

Chunk 9: 1507 tokens

Chunk 10: 1453 tokens

Chunk 11: 1976 tokens

Chunk 12: 1871 tokens

Chunk 13: 1620 tokens

Chunk 14: 1906 tokens

Chunk 15: 1558 tokens

Chunk 16: 1889 tokens

Chunk 17: 2233 tokens

Chunk 18: 2208 tokens

Chunk 19: 2259 tokens

Split document into 20 chunks

Chunk 0: 4620 tokens

Chunk 1: 3446 tokens

Chunk 2: 1660 tokens

Chunk 3: 3203 tokens

Chunk 4: 4373 tokens

Chunk 5: 4233 tokens

Chunk 6: 3651 tokens

Chunk 7: 3820 tokens

Chunk 8: 3018 tokens

Chunk 9: 3018 tokens

Chunk 10: 4201 tokens

Chunk 11: 3043 tokens

Chunk 12: 2438 tokens

Chunk 13: 3295 tokens

Chunk 14: 2578 tokens

Chunk 15: 2423 tokens

Chunk 16: 1386 tokens

Chunk 17: 1482 tokens

Chunk 18: 1615 tokens

Chunk 19: 1454 tokens

Split document into 20 chunks

Chunk 0: 1468 tokens

Chunk 1: 1946 tokens

Chunk 2: 2020 tokens

Chunk 3: 3384 tokens

Chunk 4: 2458 tokens

Chunk 5: 3535 tokens

Chunk 6: 3059 tokens

Chunk 7: 2027 tokens

Chunk 8: 2417 tokens

Chunk 9: 2772 tokens

Chunk 10: 1913 tokens

Chunk 11: 2674 tokens

Chunk 12: 2131 tokens

Chunk 13: 1409 tokens

Chunk 14: 3256 tokens

Chunk 15: 2827 tokens

Chunk 16: 2547 tokens

Chunk 17: 4187 tokens

Chunk 18: 1527 tokens

Chunk 19: 1246 tokens

Split document into 20 chunks

Chunk 0: 1272 tokens

Chunk 1: 1646 tokens

Chunk 2: 1643 tokens

Chunk 3: 2279 tokens

Chunk 4: 1451 tokens

Chunk 5: 1635 tokens

Chunk 6: 1983 tokens

Chunk 7: 1337 tokens

Chunk 8: 1820 tokens

Chunk 9: 2269 tokens

Chunk 10: 2894 tokens

Chunk 11: 2176 tokens

Chunk 12: 1401 tokens

Chunk 13: 1882 tokens

Chunk 14: 2114 tokens

Chunk 15: 2240 tokens

Chunk 16: 1900 tokens

Chunk 17: 1550 tokens

Chunk 18: 1713 tokens

Chunk 19: 2035 tokens

Split document into 20 chunks

Chunk 0: 2694 tokens

Chunk 1: 1808 tokens

Chunk 2: 1874 tokens

Chunk 3: 1328 tokens

Chunk 4: 1552 tokens

Chunk 5: 1436 tokens

Chunk 6: 1367 tokens

Chunk 7: 1333 tokens

Chunk 8: 978 tokens

Chunk 9: 1303 tokens

Chunk 10: 1738 tokens

Chunk 11: 1509 tokens

Chunk 12: 1875 tokens

Chunk 13: 1524 tokens

Chunk 14: 1597 tokens

Chunk 15: 1807 tokens

Chunk 16: 2449 tokens

Chunk 17: 2271 tokens

Chunk 18: 1467 tokens

Chunk 19: 1540 tokens

Split document into 20 chunks

Chunk 0: 1597 tokens

Chunk 1: 1554 tokens

Chunk 2: 1685 tokens

Chunk 3: 1416 tokens

Chunk 4: 1702 tokens

Chunk 5: 1575 tokens

Chunk 6: 1842 tokens

Chunk 7: 1981 tokens

Chunk 8: 1393 tokens

Chunk 9: 1562 tokens

Chunk 10: 1569 tokens

Chunk 11: 1898 tokens

Chunk 12: 3186 tokens

Chunk 13: 2337 tokens

Chunk 14: 1889 tokens

Chunk 15: 1948 tokens

Chunk 16: 1628 tokens

Chunk 17: 3544 tokens

Chunk 18: 2454 tokens

Chunk 19: 1882 tokens

==== ROUTING AT DEPTH 1 ====

Evaluating 180 chunks for relevance

Selected chunks: 5, 6, 7, 17, 18, 19, 20, 400, 401, 408, 410

Updated scratchpad:

DEPTH 0 REASONING:

The user wants to know the format requirements for filing a motion to compel discovery and how signatures should be handled for such motions.

Based on the evaluation of chunks:

- Chunks 0, 1, 2, 3, 4, 5, 6, 7, 8 are highly relevant since they cover general requirements for submissions, motions, signatures, service, and specifically for motions and discovery in TTAB proceedings.

- These chunks contain detailed info about electronic filing (via ESTTA), paper filing exceptions, signature requirements, service requirements, format of submissions (including motions), timing rules, and professionals' responsibilities.

- Additionally, the rules for motions to compel, including required attachments, timing, and certification of good faith efforts to resolve discovery disputes, are specifically outlined.

- Chunks 11-19 mostly cover post-trial and appeal procedures, less directly relevant.

I will select these relevant chunks to provide a thorough answer about how motions to compel discovery should be filed and how signatures on such motions are handled.

DEPTH 1 REASONING:

The user's question asks about the format requirements for filing a motion to compel discovery and how signatures should be handled. Relevant information will likely involve sections on "motions" specifically "motion to compel discovery," filing format, signature requirements, and related procedural rules in TTAB practice.

Based on the large amount and depth of the provided chunks, I identified the following relevant topics and chunks addressing them:

1. Signature Requirements & Acceptable Formats for Motions and Submissions

- Detailed rules for signatures on submissions including motions are in chunks 5, 6, 7.

- These include rules on electronic filing, use of ESTTA, required signature format including electronic signatures with the symbol method "/sig/".

2. Format of Submissions and Use of ESTTA

- Filing requirements, printing format, size, paper submissions, and special exceptions are found in chunks 7, 8, 9, 10, 11, 12, 13.

- Motions generally must be filed via ESTTA, with exceptions requiring petitions to Director with reasons.

3. Motions to Compel and Discovery Motions

- Specific rules related to filing motions such as motions to compel discovery, service, and timing are expected in the portions covering discovery and motions.

- Discovery and related motions are introduced in chapters starting from chunk 400 and beyond.

4. Service and Certificates of Service

- How motions must be served and proof of service with certificates is discussed in chunks 17, 18, 19, 20.

- These include requirements that every submission in inter partes cases, except notice of opposition or petition to cancel, must be served on adversary and proof of service provided.

5. Motions to Compel Discovery Details

- Discovery and motion procedure, filing format, timing, service, and related sanctions are extensively covered in chunks 400 and following.

- These include disclosures, discovery conferences, timing for discovery requests, responses, motions to compel, and sanctions.

From the above, the following chunks are most likely to provide the requested information:

- Chunks 5, 6, 7: Signature rules and filing format including motions.

- Chunks 17, 18, 19, 20: Service of submissions and certificates of service.

- Chunks 400 to 410 plus related portions (401.01, 401.02, 401.03, 408, 410): Discovery rules, motions to compel details.

These cover the format of motions including motions to compel discovery, signature rules, service and proof of service, and discovery procedure and rules governing motions.

Less relevant chunks to the question are routine procedural provisions on oppositions, petitions to cancel, answers, which do not specifically address filing or signatures of motions to compel discovery.

Plan: Select the above relevant chunks and report key procedural points on the format in which a motion to compel discovery must be filed and how signatures must be handled.
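
The depth-1 selection lists IDs such as 400 and 410 although only 180 chunks were evaluated, which suggests the router mixed section numbers with chunk indexes. A guard along the following lines could catch that before recursing. This is a hypothetical sketch: it assumes selected IDs are zero-based indexes into the evaluated list, and `validate_selection` is not a function from the run that produced this log.

```python
def validate_selection(selected: list[int], n_candidates: int) -> tuple[list[int], list[int]]:
    """Split router output into usable indexes and out-of-range ones."""
    # Assumption: valid IDs are zero-based positions in the evaluated list.
    valid = [i for i in selected if 0 <= i < n_candidates]
    rejected = [i for i in selected if not 0 <= i < n_candidates]
    return valid, rejected


# IDs and candidate count copied from the depth-1 routing step above.
selected = [5, 6, 7, 17, 18, 19, 20, 400, 401, 408, 410]
valid, rejected = validate_selection(selected, 180)
print("valid:", valid)
print("rejected:", rejected)
```

Rejected IDs could be logged or fed back to the router rather than silently dropped, so that discovery-related chunks are not lost without notice.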

Split document into 8 chunks

Chunk 0: 398 tokens

Chunk 1: 256 tokens

Chunk 2: 389 tokens

Chunk 3: 356 tokens

Chunk 4: 401 tokens

Chunk 5: 277 tokens

Chunk 6: 435 tokens

Chunk 7: 265 tokens

Split document into 6 chunks

Chunk 0: 353 tokens

Chunk 1: 393 tokens

Chunk 2: 388 tokens

Chunk 3: 398 tokens

Chunk 4: 397 tokens

Chunk 5: 247 tokens

Split document into 5 chunks

Chunk 0: 325 tokens

Chunk 1: 389 tokens

Chunk 2: 303 tokens

Chunk 3: 344 tokens

Chunk 4: 306 tokens

Split document into 8 chunks

Chunk 0: 396 tokens

Chunk 1: 354 tokens

Chunk 2: 361 tokens

Chunk 3: 378 tokens

Chunk 4: 388 tokens

Chunk 5: 394 tokens

Chunk 6: 361 tokens

Chunk 7: 61 tokens

Split document into 7 chunks

Chunk 0: 396 tokens

Chunk 1: 355 tokens

Chunk 2: 377 tokens

Chunk 3: 362 tokens

Chunk 4: 326 tokens

Chunk 5: 397 tokens

Chunk 6: 69 tokens

Split document into 3 chunks

Chunk 0: 388 tokens

Chunk 1: 373 tokens

Chunk 2: 221 tokens

Split document into 8 chunks

Chunk 0: 360 tokens

Chunk 1: 314 tokens

Chunk 2: 369 tokens

Chunk 3: 363 tokens

Chunk 4: 361 tokens

Chunk 5: 393 tokens

Chunk 6: 361 tokens

Chunk 7: 358 tokens

==== ROUTING AT DEPTH 2 ====

Evaluating 45 chunks for relevance

Selected chunks: 0, 4, 5, 6, 7, 8, 9, 10, 11, 12, 15, 16, 17, 18, 23, 24, 25, 26, 27, 28, 29, 30, 31, 32, 33, 34, 35, 36

Updated scratchpad:

DEPTH 0 REASONING:

The user wants to know the format requirements for filing a motion to compel discovery and how signatures should be handled for such motions.

Based on the evaluation of chunks:

- Chunks 0, 1, 2, 3, 4, 5, 6, 7, 8 are highly relevant since they cover general requirements for submissions, motions, signatures, service, and specifically for motions and discovery in TTAB proceedings.

- These chunks contain detailed info about electronic filing (via ESTTA), paper filing exceptions, signature requirements, service requirements, format of submissions (including motions), timing rules, and professionals' responsibilities.

- Additionally, the rules for motions to compel, including required attachments, timing, and certification of good faith efforts to resolve discovery disputes, are specifically outlined.

- Chunks 11-19 mostly cover post-trial and appeal procedures, less directly relevant.

I will select these relevant chunks to provide a thorough answer about how motions to compel discovery should be filed and how signatures on such motions are handled.

DEPTH 1 REASONING:

The user's question asks about the format requirements for filing a motion to compel discovery and how signatures should be handled. Relevant information will likely involve sections on "motions" specifically "motion to compel discovery," filing format, signature requirements, and related procedural rules in TTAB practice.

Based on the large amount and depth of the provided chunks, I identified the following relevant topics and chunks addressing them:

1. Signature Requirements & Acceptable Formats for Motions and Submissions

- Detailed rules for signatures on submissions including motions are in chunks 5, 6, 7.

- These include rules on electronic filing, use of ESTTA, required signature format including electronic signatures with the symbol method "/sig/".

2. Format of Submissions and Use of ESTTA

- Filing requirements, printing format, size, paper submissions, and special exceptions are found in chunks 7, 8, 9, 10, 11, 12, 13.

- Motions generally must be filed via ESTTA, with exceptions requiring petitions to Director with reasons.

3. Motions to Compel and Discovery Motions

- Specific rules related to filing motions such as motions to compel discovery, service, and timing are expected in the portions covering discovery and motions.

- Discovery and related motions are introduced in chapters starting from chunk 400 and beyond.

4. Service and Certificates of Service

- How motions must be served and proof of service with certificates is discussed in chunks 17, 18, 19, 20.

- These include requirements that every submission in inter partes cases, except notice of opposition or petition to cancel, must be served on adversary and proof of service provided.

5. Motions to Compel Discovery Details

- Discovery and motion procedure, filing format, timing, service, and related sanctions are extensively covered in chunks 400 and following.

- These include disclosures, discovery conferences, timing for discovery requests, responses, motions to compel, and sanctions.

From the above, the following chunks are most likely to provide the requested information:

- Chunks 5, 6, 7: Signature rules and filing format including motions.

- Chunks 17, 18, 19, 20: Service of submissions and certificates of service.

- Chunks 400 to 410 plus related portions (401.01, 401.02, 401.03, 408, 410): Discovery rules, motions to compel details.

These cover the format of motions including motions to compel discovery, signature rules, service and proof of service, and discovery procedure and rules governing motions.

Less relevant chunks to the question are routine procedural provisions on oppositions, petitions to cancel, answers, which do not specifically address filing or signatures of motions to compel discovery.

Plan: Select the above relevant chunks and report key procedural points on the format in which a motion to compel discovery must be filed and how signatures must be handled.

DEPTH 2 REASONING:

The user's question is about the format for filing a motion to compel discovery and handling of signatures. Relevant information is likely contained in sections addressing motions, discovery procedures, submission format, signature requirements, and service rules.

Chunks covering signature requirements (5-12) provide detailed rules on legal signatures, electronic signatures, who must sign (attorneys or parties with legal authority), and signature content.

Chunks 0, 4, 7-10, 15-18 discuss the required format for submissions, including motions, the mandate to file electronically via ESTTA, and exceptions for paper filings.

Chunks 23-35 address service of submissions, including requirements for service on all parties, methods of service, and certificates of service.

Finally, discovery-related motions such as motions to compel discovery and their filing details should be in chunks from 400 onwards (although these aren't fully visible here, the rationale included these chunks as likely relevant).

Therefore, chunks 0,4,5,6,7,8,9,10,11,12,15,16,17,18,23,24,25,26,27,28,29,30,31,32,33,34,35,36 are selected as most relevant to provide a thorough answer on the filing format and signatures for a motion to compel discovery.

Returning 28 relevant chunks at depth 2
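
The scratchpad refers to electronic signatures entered with the "/sig/" method. As a rough illustration only, and assuming that convention means the signer's chosen text enclosed between two forward slashes, a pre-filing check might look like this. The helper name `looks_like_slash_signature` is hypothetical, and the pattern is a sketch rather than a statement of what ESTTA actually accepts.

```python
import re

# Assumption: a signature is non-empty text between two forward slashes,
# with no leading or trailing whitespace inside the slashes.
_SLASH_SIGNATURE = re.compile(r"^/\S(?:.*\S)?/$")


def looks_like_slash_signature(line: str) -> bool:
    """Return True if the line has the shape /some text/."""
    return bool(_SLASH_SIGNATURE.match(line.strip()))


print(looks_like_slash_signature("/Jane Doe/"))
print(looks_like_slash_signature("Jane Doe"))
```

A check like this only tests the shape of the signature line; whether the signer is the party, its attorney, or another authorized representative still has to be confirmed separately.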

==== FIRST 3 RETRIEVED PARAGRAPHS ====

PARAGRAPH 1 (ID: 0.0.5.0):

----------------------------------------

104 Business to be Conducted in Writing

37 C.F.R. § 2.190(b) Electronic trademark documents. … Documents that relate to proceedings before

the Trademark Trial and Appeal Board must be filed electronically with the Board through ESTTA. 37 C.F.R. § 2.191 Action of the Office based on the written record. All business with the Office must be

transacted in writing. The action of the Office will be based exclusively on the written record. No consideration

will be given to any alleged oral promise, stipulation, or understanding when there is disagreement or doubt. With the exceptions of discovery conferences with Board participation, see TBMP § 401.01, and telephone

conferences, see TBMP § 413.01 and TBMP § 502.06, all business with the Board should be transacted in

writing. 37 C.F.R. § 2.191. The personal attendance of parties or their attorneys or other authorized

representatives at the offices of the Board is unnecessary, except in the case of a pretrial conference as

provided in 37 C.F.R. § 2.120(j), or upon oral argument at final hearing, if a party so desires, as provided

in 37 C.F.R. § 2.129. Decisions of the Board will be based exclusively on the written record before it. [Note

1.] Documents filed in proceedings before the Board must be filed through ESTTA. 37 C.F.R. § 2.190(b). See TBMP § 110.01(a). Board proceedings are conducted in English. If a party intends to rely upon any submissions that are in a

language other than English, the party should also file a translation of the submissions. If a translation is

not filed, the submissions may not be considered. [Note 2.] NOTES:

1. Cf.

----------------------------------------

PARAGRAPH 2 (ID: 0.0.5.4):

----------------------------------------

The document should

also include a title describing its nature, e.g., “Notice of Opposition,” “Answer,” “Motion to Compel,” “Brief

in Opposition to Respondent’s Motion for Summary Judgment,” or “Notice of Reliance.”

Documents filed in an application which is the subject of an inter partes proceeding before the Board should

be filed with the Board, not the Trademark Operation, and should bear at the top of the first page both the

application serial number, and the inter partes proceeding number and caption. Similarly, requests under

Trademark Act § 7, 15 U.S.C. § 1057, to amend, correct, or surrender a registration which is the subject of

a Board inter partes proceeding, and any new power of attorney, designation of domestic representative, or

change of address submitted in connection with such a registration, should be filed with the Board, not with

the Trademark Operation, and should bear at the top of its first page the registration number, and the inter

partes proceeding number and the proceeding caption. [Note 2.] 100-14 June 2024

TRADEMARK TRIAL AND APPEAL BOARD MANUAL OF PROCEDURE § 105

NOTES:

1. 37 C.F.R. § 2.194. 2. 37 C.F.R. § 2.194. 106.02 Signature of Submissions

37 C.F.R. § 2.119(e) Every submission filed in an inter partes proceeding, and every request for an extension

of time to file an opposition, must be signed by the party filing it, or by the party’s attorney or other authorized

representative, but an unsigned submission will not be refused consideration if a signed copy is submitted

to the Office within the time limit set in the notification of this defect by the Office. 37 C.F.R. § 11.14(e) Appearance.

----------------------------------------

PARAGRAPH 3 (ID: 0.0.5.5):

----------------------------------------

No individual other than those specified in paragraphs (a), (b), and (c)

of this section will be permitted to practice before the Office in trademark matters on behalf of a client. Except as specified in § 2.11(a) of this chapter, an individual may appear in a trademark or other non-patent

matter in his or her own behalf or on behalf of:

(1) A firm of which he or she is a member;

(2) A partnership of which he or she is a partner; or

(3) A corporation or association of which he or she is an officer and which he or she is authorized to

represent. 37 C.F.R. § 11.18 Signature and certificate for correspondence filed in the Office. (a) For all documents filed in the Office in patent, trademark, and other non-patent matters, and all

documents filed with a hearing officer in a disciplinary proceeding, except for correspondence that is

required to be signed by the applicant or party, each piece of correspondence filed by a practitioner in the

Office must bear a signature, personally signed or inserted by such practitioner, in compliance with §

1.4(d)(1), § 1.4(d)(2), or § 2.193(a) of this chapter.

----------------------------------------